Vengance_01

Supreme [H]ardness

- Joined: Dec 23, 2001
- Messages: 7,220

wait for a sale.


> Will be interesting to see how it does on a regular SATA SSD, or even an HDD. With GDeflate GPU decompression you can get 1.5 GB/s effective bandwidth from regular HDDs, which is not too shabby at all.

I have all of those in my PC. I don't have the testing suite to produce benchmark numbers, but I can find out how it feels to play on each.

> how it feels

That could be the most relevant metric here. Not sure how well a benchmark (even 1% lows) will capture this.

> That could be the most relevant metric here. Not sure how well a benchmark (even 1% lows) will capture this.

With the way this game plays, any stutter or interruption when rifts appear will be the most important thing from a gameplay perspective. If there is barely a noticeable interruption, I think it will be acceptable.
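To illustrate why a 1% low figure might miss a rare rift hitch, here is a minimal Python sketch. It assumes one common definition of "1% low" (average FPS over the slowest 1% of frames); benchmark tools differ on this. The function names, the hitch threshold and the frame-time numbers are all made up for illustration, not measurements from the game.

```python
from statistics import mean, median


def one_percent_low_fps(frame_times_ms):
    """Average FPS over the slowest 1% of frames (one common definition)."""
    worst = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst) // 100)
    return 1000.0 / mean(worst[:count])


def count_hitches(frame_times_ms, factor=3.0):
    """Count frames slower than `factor` times the median frame time.

    The factor of 3 is an arbitrary illustrative threshold.
    """
    typical = median(frame_times_ms)
    return sum(1 for t in frame_times_ms if t > factor * typical)


# Hypothetical captures: steady 10 ms frames with two 100 ms hitches.
short_run = [10.0] * 998 + [100.0] * 2      # 1,000 frames
long_run = [10.0] * 99_998 + [100.0] * 2    # 100,000 frames

print(round(one_percent_low_fps(short_run), 1))  # hitches dominate the worst 1%
print(round(one_percent_low_fps(long_run), 1))   # same hitches, diluted
print(count_hitches(short_run), count_hitches(long_run))
```

In the longer capture the same two hitches barely move the 1% low, while a direct hitch count still finds both. That is roughly why how it feels may tell you more than the benchmark number.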

> AMD driver issue no doubt since this is the first game supporting RT with DirectStorage 1.2 at the same time.
>
> Portal Prelude RTX doesn't count since it's a mod and a converted engine to Vulkan no less.

Could be the last-minute addition of ray traced ambient occlusion that is causing issues. Correct me if I'm wrong, but I don't think there are any "AMD sponsored" games that include the effect, and it may be due to these technical issues Nixxes is referring to.

4PM UK time for unlock, gonna finish work earlier to get home at prime time!

Ratchet & Clank: Rift Apart- Launch Trailer | PC Games

Ratchet and Clank: Rift Apart- PC Max vs PS5 vs PC Very Low- First Look

AMD driver issue no doubt since this is the first game supporting RT with DirectStorage 1.2 at the same time

> Also, the difference between SATA and NVMe is bigger than I expected.

Some sequences were made on a different CPU and system overall as well.

> robbiekhan, have you tried not using the overlay to see if it still crashes then? Sometimes the RTSS overlay can cause them.

I did think this. I also had Nvidia GeForce Experience enabled but not its overlay, as I use it to record game footage. But it seemed to be an issue whether it was enabled or off.

There are defo crashes, I had a couple already and I'm 2% into the story. Will write up a proper review shortly as I am posting it on another board too.

Edit*

Just had a quick go and the immediate thing I noticed in settings is that sometimes the settings aren't remembered. Also Full Screen is not Full Screen, it's Windowed Full Screen. Windowed mode is just labelled as windowed. So they got this naming convention wrong. I only noticed because my DLDSR res was not available until I set it to Exclusive Full Screen.

Another time I also had to quit and load the game twice for it to load using the DSR resolution and other settings, otherwise it loaded at native 3440x1440.

The advanced graphics presets toggle only goes up to Very High, which is strange because various GFX options go to Ultra. So by selecting the max preset of Very High you aren't actually maxing out the settings, and you then have to manually increase those that do go to Ultra. Unlike Cyberpunk, there isn't an indicator to tell you how many more settings beyond Ultra each option has, which is annoying but hey ho.

Main settings I'm using:

View attachment 585754

View attachment 585757

View attachment 585760

With DLSS set to Quality at 5160x2160 on a 4090 and 12700KF I was seeing the framerate bounce from just above 60fps at times to 109fps at others, even though the scene hadn't changed, which is weird. Then I glanced at the RTSS overlay and saw that a lot of the time the GPU usage, even at 5160x2160, was dropping below 90% whilst the CPU usage remained in the 40% range. So it looks like there's an optimisation issue with CPU utilisation resulting in the GPU having to wait around. It's not a CPU bottleneck issue because I am at 5160x2160, and a 12700KF is more than beefy enough to handle anything.

I suspect we will be seeing optimisation patches fairly soon, as I also noticed numerous instances of frame drops and inconsistent performance when enabling frame generation.
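For anyone checking the resolution numbers, a quick sketch of the arithmetic. It assumes the DLDSR factor in use is 2.25x (1.5x per axis) and that DLSS Quality renders at roughly two thirds of the output resolution per axis. Neither figure is stated in the post, and `scale` is just an illustrative helper.

```python
from fractions import Fraction


def scale(width, height, per_axis):
    """Scale a resolution by a per-axis factor, rounding to whole pixels."""
    return round(width * per_axis), round(height * per_axis)


native = (3440, 1440)

# Assumption: DLDSR 2.25x means 2.25x the pixel count, i.e. 1.5x per axis.
dldsr_per_axis = Fraction(3, 2)
# Assumption: DLSS Quality renders at about 2/3 of the output per axis.
dlss_quality_per_axis = Fraction(2, 3)

output = scale(*native, dldsr_per_axis)
internal = scale(*output, dlss_quality_per_axis)

print("DLDSR output:", output)
print("DLSS Quality internal render:", internal)
```

Under those assumptions the internal render resolution at DLDSR plus DLSS Quality works out to the same as the native panel resolution.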

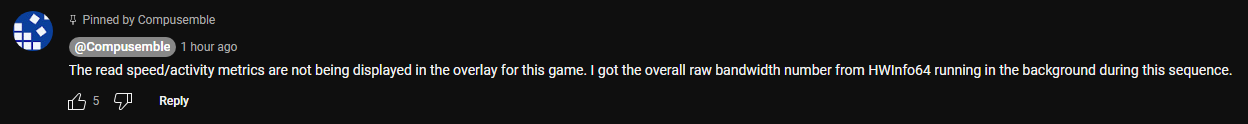

Screens showing the inconsistencies noted above, keep an eye on the RTSS overlays... All of these are with Frame Gen off except the last one. I should add that changing to 3440x1440 (native res) didn't really change the framerate much or the frametime behaviour. A definite bug it would seem, but I will do a system reboot shortly just to be double sure.

What is going on here???

View attachment 585755

Why is this scene 68 fps??? Oh yeah that's why, the GPU is at 71%...

View attachment 585756

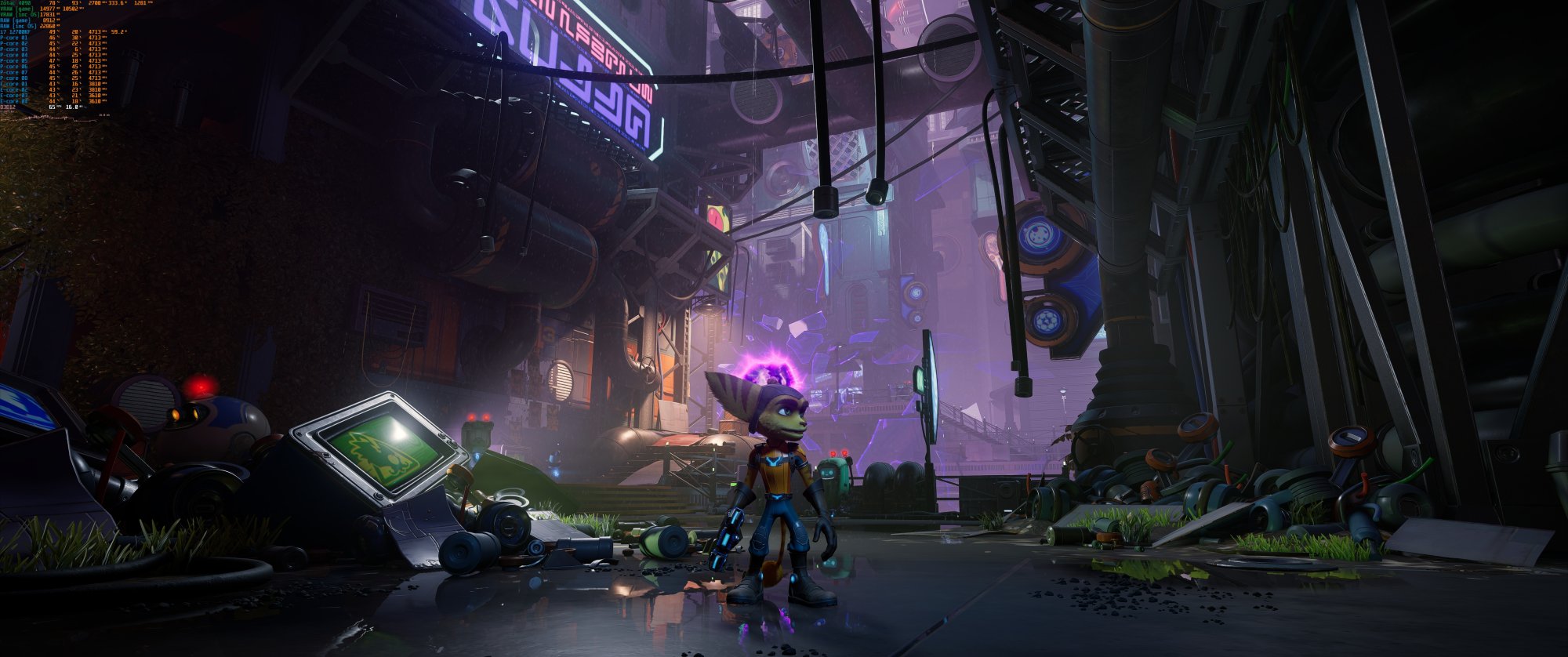

But when it is working fine it's very good, look at the CPU use below, same as above, the GPU is also 98% which is where it should be:

View attachment 585762

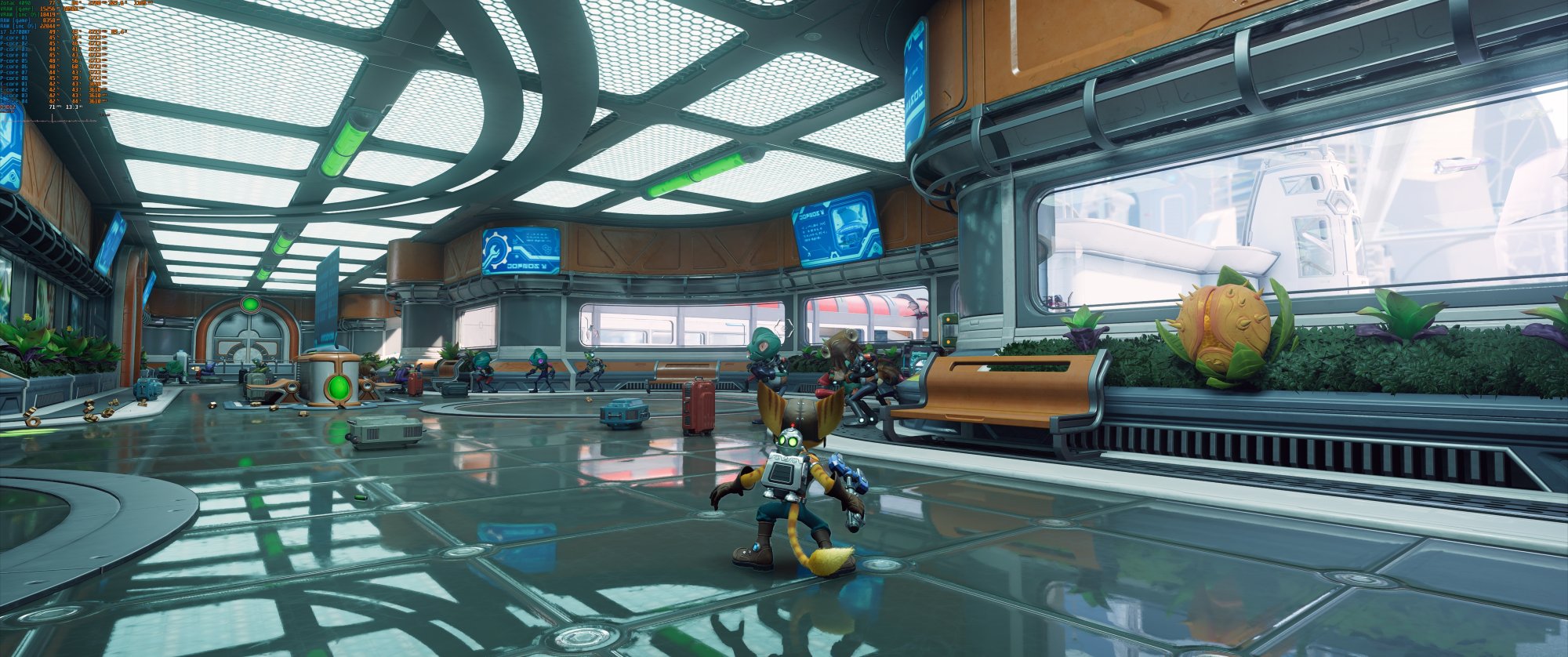

But then walk nearby somewhere and suddenly the GPU use drops again resulting in:

View attachment 585759

This scene was an eye opener, 15% CPU utilisation??? Multiple physical cores are either idling or parked...

View attachment 585763

And here we are back to normal, remember DLSS Quality only at 5160x2160 and when it's like this it runs so smooth:

View attachment 585758

Here the GPU utilisation dropped randomly again:

View attachment 585761

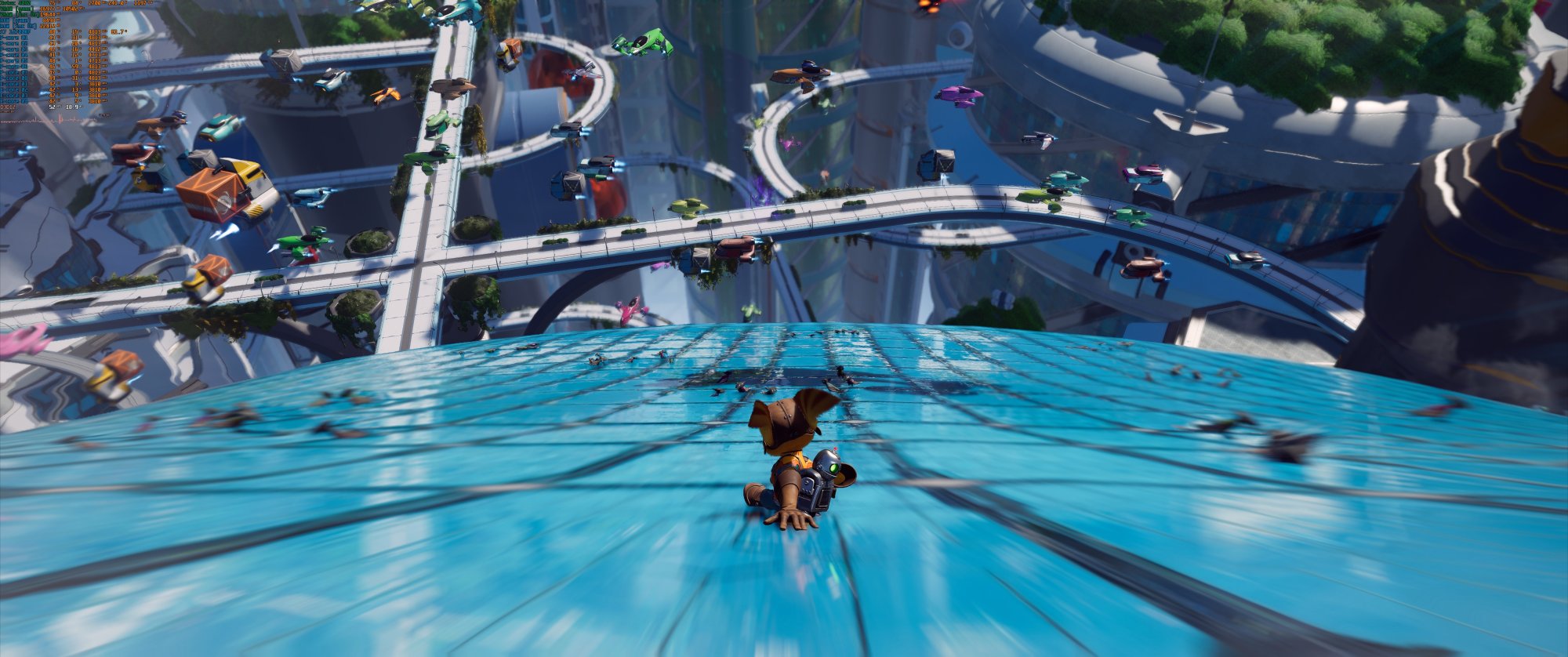

And lastly, this is the experience with Frame Gen enabled. Whether it's at 3440x1440 or at DLDSR 5160x2160 doesn't seem to matter, the framerate just drops or there are consistent frametime stutters as below:

View attachment 585753

Oh I also got a crash to desktop at the start of the game too, which can be seen at the end of this clip:

Other than that, it looks great though, and mouse and keyboard works well with the mechanics. There's no Raw input setting though, and I did have to drop the x and y sensitivity to 4 from the default 5 as on the XM2we I found it too sensitive at 1000CPI and 1000Hz, which is my 24/7 default for everything.

Now, time to watch the DF review to see if what they found matches what I found.

> The settings screenshot? The GPU does not run hot, it runs at normal temps and there have never been any issues with the card running hot in any game. I can path trace Cyberpunk all day long without a single issue.
>
> But if you mean the first in-game screenshot shown then that's at 78 degrees core temp, which is normal.

The forum compressed your images, so to me it looked like the top one said 91C.

Yeah overall it's a solid job, a few technical hitches but otherwise seems all fine if you take heed of the quirks noted earlier and those by Digital Foundry!

I did a full video now too as I had to restart the game due to the progress-breaking bug that has carried over from the PS5 (don't leave the club to explore when you have to follow the Phantom, the doors will close and you're stuffed unless you created a manual save point before the event).

This is from the start to the crash site with no other interruptions, no crashes etc. You can see various framerate drops here and there, and it ranges from as low as 31fps at one point to over 100fps at others. CPU/GPU utilisation remains wacky.

> Looks like the video is still processing because I'm only getting 320p in the quality option.

Ah bummer I bet it's YouTube taking eons given the length of the video!

> Ah bummer I bet it's YouTube taking eons given the length of the video!

Last time I uploaded a 4K video it took nearly an hour to process an 8-minute video. Looks like all the resolution options are there now.