Bankie

2[H]4U

- Joined: Jul 27, 2004
- Messages: 2,472

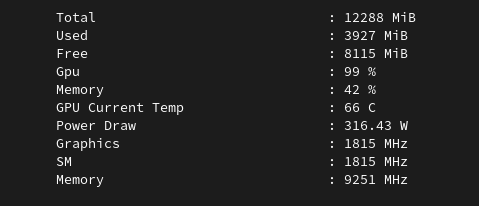

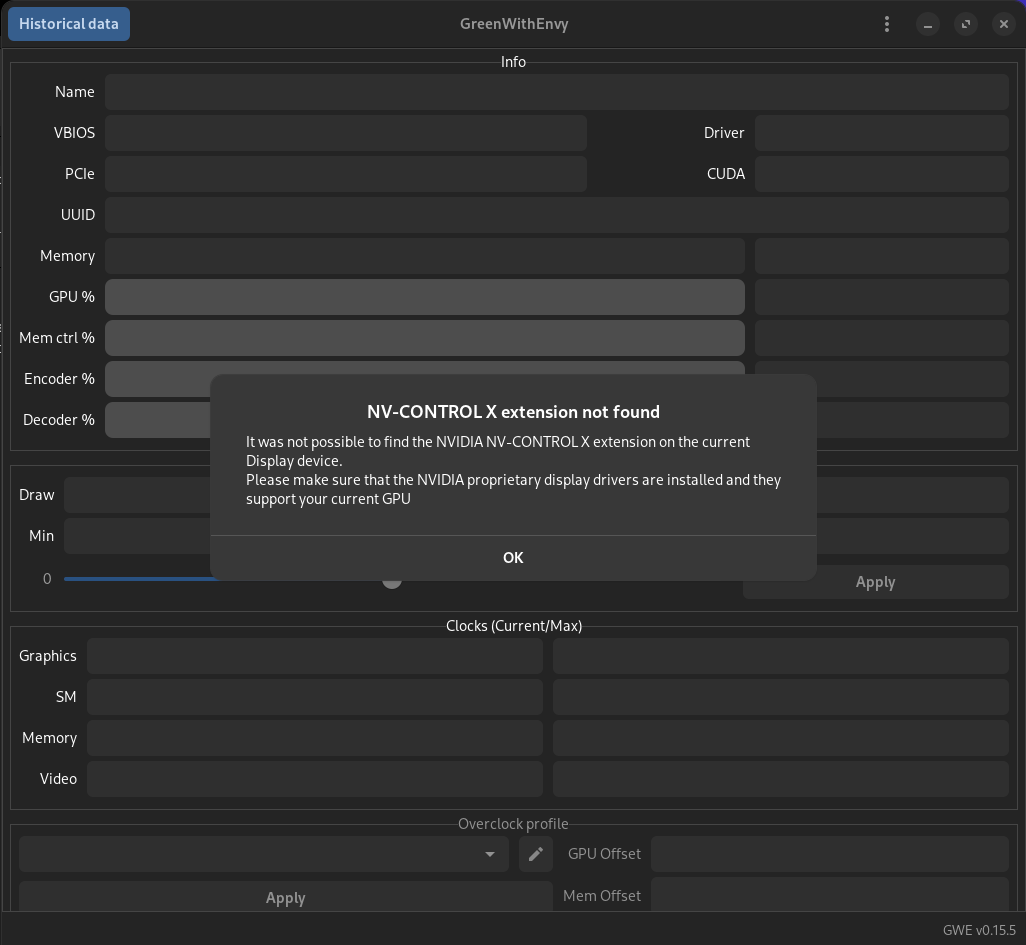

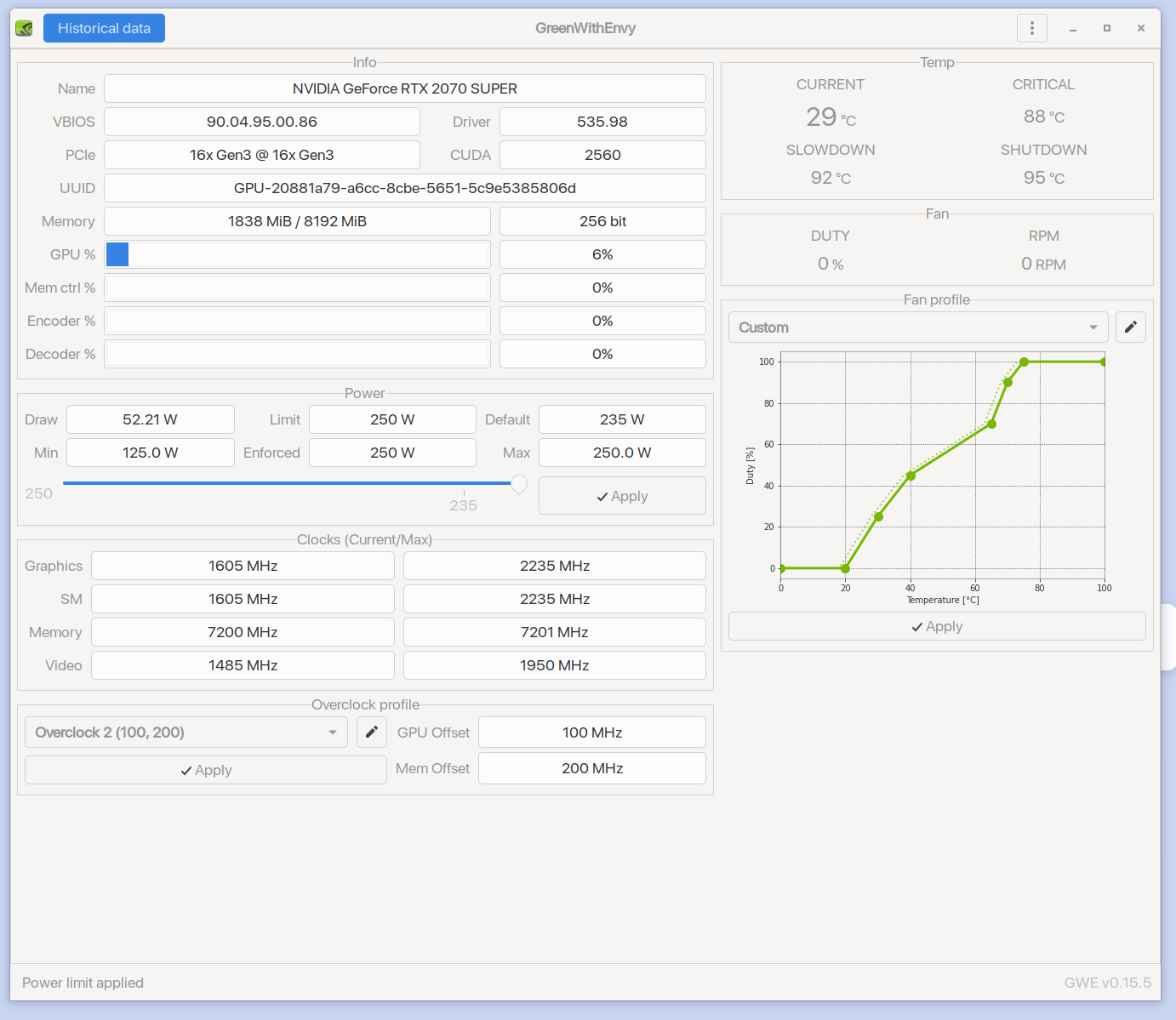

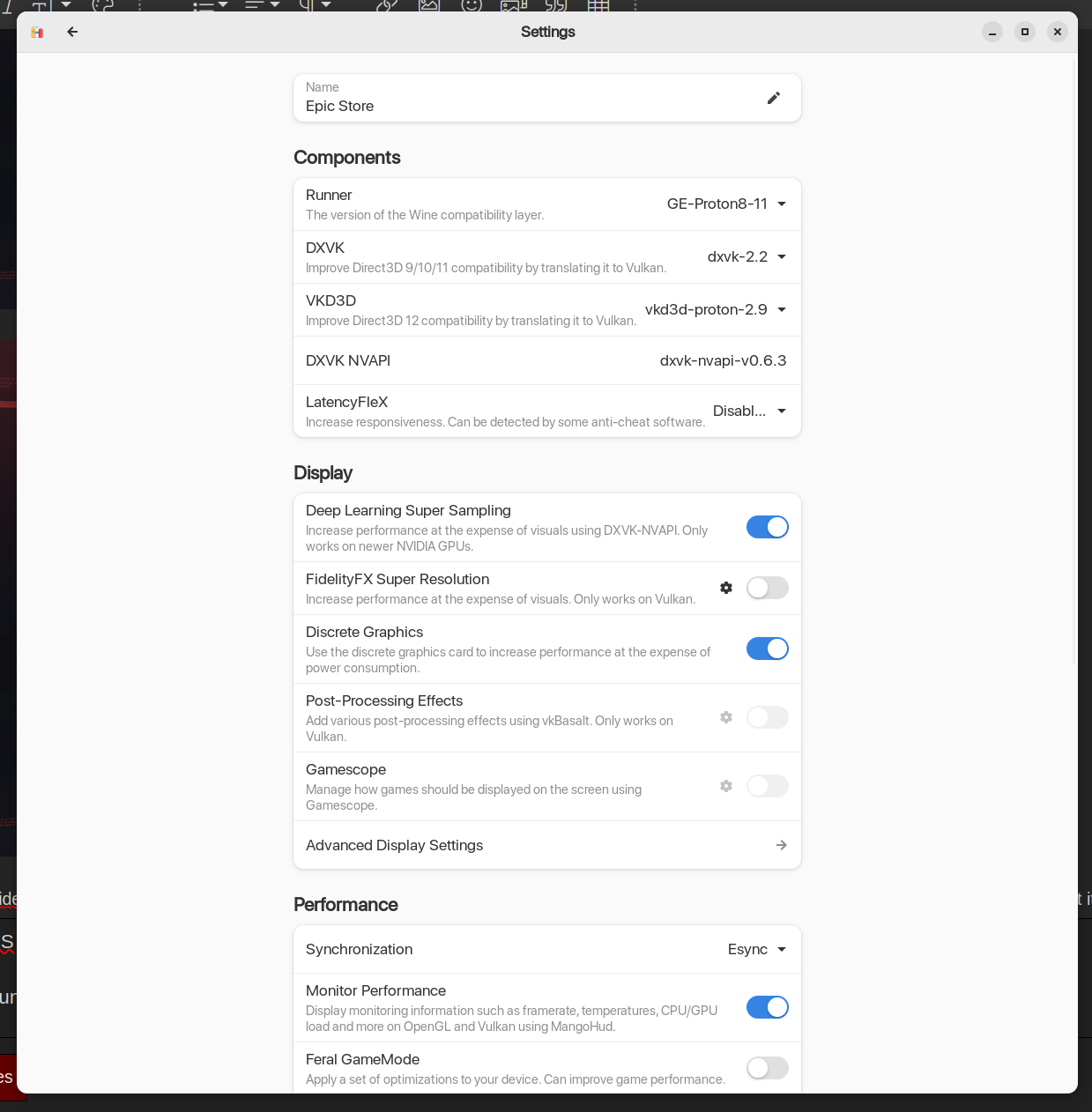

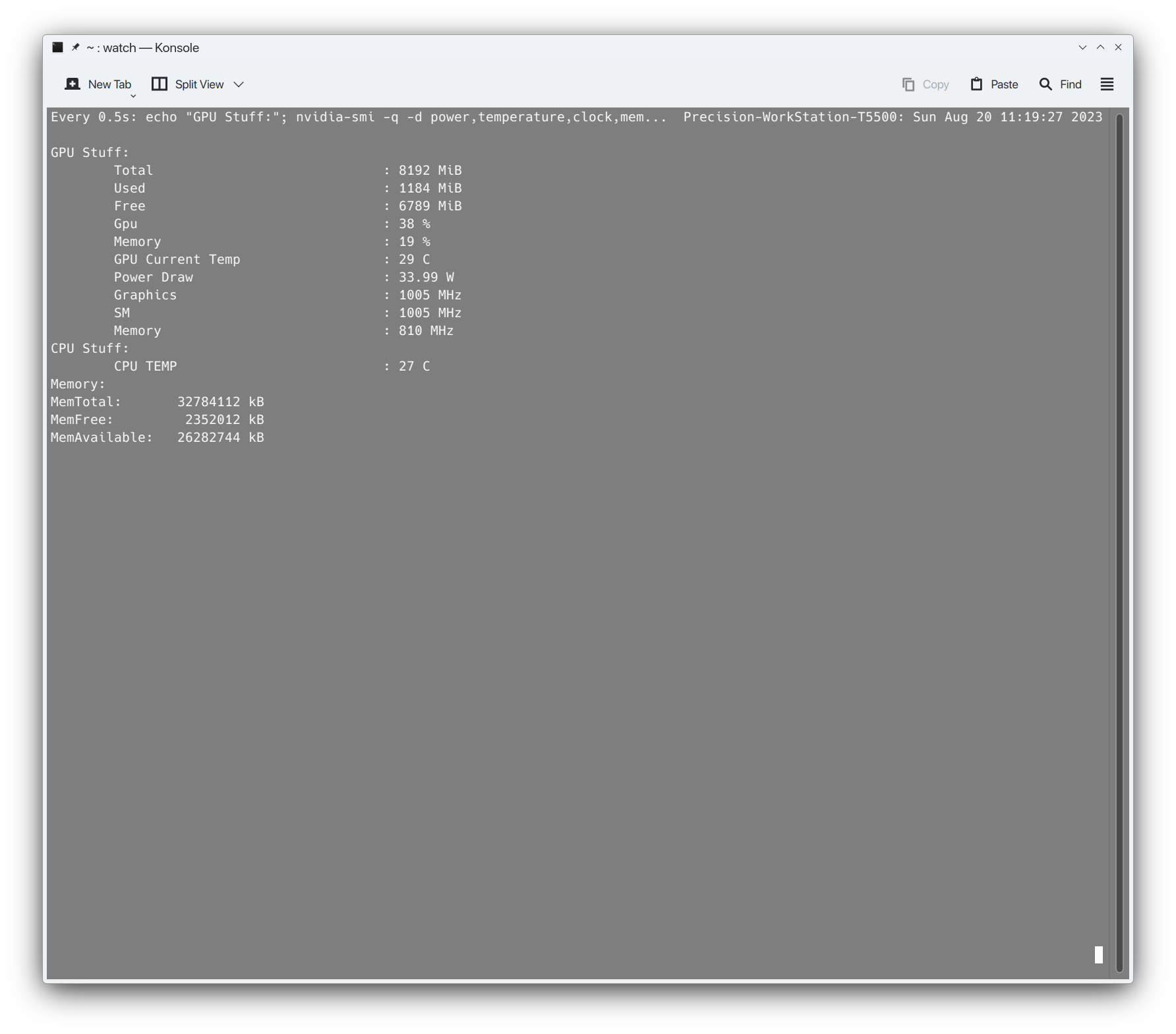

> What is commonly used to under/overclock on Linux? I need to lower the power limit on this 3080 Ti quite badly.

There's one called 'GreenWithEnvy' or some such that will let you do it.

https://gitlab.com/leinardi/gwe
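If you'd rather skip the GUI, the proprietary driver's own `nvidia-smi` can also set the power limit. Below is a rough Python sketch, assuming the NVIDIA proprietary driver and `nvidia-smi` are installed. The helper names (`parse_limits`, `clamp_watts`, `build_power_limit_cmd`, `set_power_limit`) are made up for illustration. The script reads the card's allowed min/max through `--query-gpu=power.min_limit,power.max_limit`, clamps the wattage you ask for into that range, and only applies it with `-pl` when `dry_run=False`. Applying it typically needs root, and the limit usually resets after a reboot or driver reload.

```python
from __future__ import annotations

import subprocess


def parse_limits(csv_line: str) -> tuple[float, float]:
    """Parse 'min, max' watts from one line of nvidia-smi CSV output (noheader, nounits)."""
    lo, hi = (float(field) for field in csv_line.split(","))
    return lo, hi


def clamp_watts(requested: float, lo: float, hi: float) -> float:
    """Keep the requested limit inside the range the card reports as allowed."""
    return max(lo, min(hi, requested))


def build_power_limit_cmd(gpu_index: int, watts: float) -> list[str]:
    """Build the nvidia-smi command that sets the power limit (in watts) on one GPU."""
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", f"{watts:.0f}"]


def query_limits(gpu_index: int = 0) -> tuple[float, float]:
    """Ask nvidia-smi for the min/max power limit of one GPU."""
    out = subprocess.run(
        [
            "nvidia-smi",
            "-i", str(gpu_index),
            "--query-gpu=power.min_limit,power.max_limit",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_limits(out.strip().splitlines()[0])


def set_power_limit(watts: float, gpu_index: int = 0, dry_run: bool = True) -> list[str]:
    """Clamp the request to the card's range; run it only if dry_run is False (needs root)."""
    lo, hi = query_limits(gpu_index)
    cmd = build_power_limit_cmd(gpu_index, clamp_watts(watts, lo, hi))
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```

Usage: `set_power_limit(300)` just returns the command it would run; call `set_power_limit(300, dry_run=False)` as root to actually apply it. The clamping step is there so a typo doesn't hand the driver a value outside what the card accepts.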
