Cirkustanz

Limp Gawd | Joined: Sep 26, 2007 | Messages: 501

So, lately I have two displays hooked up to my computer: a monitor and a television. I only use the television when I'm playing a game. The TV is in front of my couch, in a corner of the living room, and the monitor is by my desk.

My card is an ASUS ROG Strix OC 4090. My monitor is connected to one of the DisplayPort (DP) outputs, and the television is connected to one of the card's two HDMI ports.

Sometimes I go several days without playing games, and I really only enable the television as a display when I want to play a game on my couch. That's for *reasons*: I play an old MMO where, if two displays are connected, I lose the ability to use windowed gamma to change the brightness, so whenever I play that game I specifically disable the television.

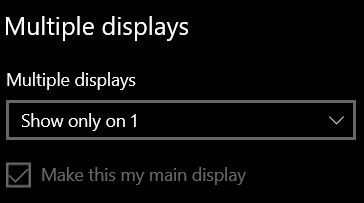

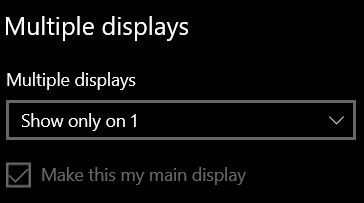

Display 1 is the monitor (2560x1440) and display 2 is the TV (4K). It looks this way because the television is disabled. Whenever the television is enabled, the size comparison in Windows display settings obviously shows display 2 as much larger than display 1.


Whenever I want to play a game on the couch, I just change the "Multiple displays" setting to either have both displays enabled, or enable display 2 (the television) and also set it as the main display.

Sounds good, right? Pretty simple?

Here's the stupid question part:

When I shut my computer down, I always do it in the configuration from the first screenshot, with display 1 (the monitor) as the only active display in Windows. But even when the television is powered off (both while the PC shuts down and when I turn the PC back on), Windows tries to use the television as the primary display. The UEFI screen shows on the monitor without any problem, but the moment it gets to Windows the monitor shuts off, and it stays that way right up until I turn the television on. THEN I see Windows on the monitor as I would expect.

And if I don't then enable the television in Windows display settings, when the television eventually powers off from not receiving a signal, guess what happens... my monitor shuts off. I've tried changing which HDMI port the television is plugged into, and I've even tried connecting it to a DP output through a DP-to-HDMI adapter, and it still does it.

It's so freaking annoying! What am I doing wrong?

