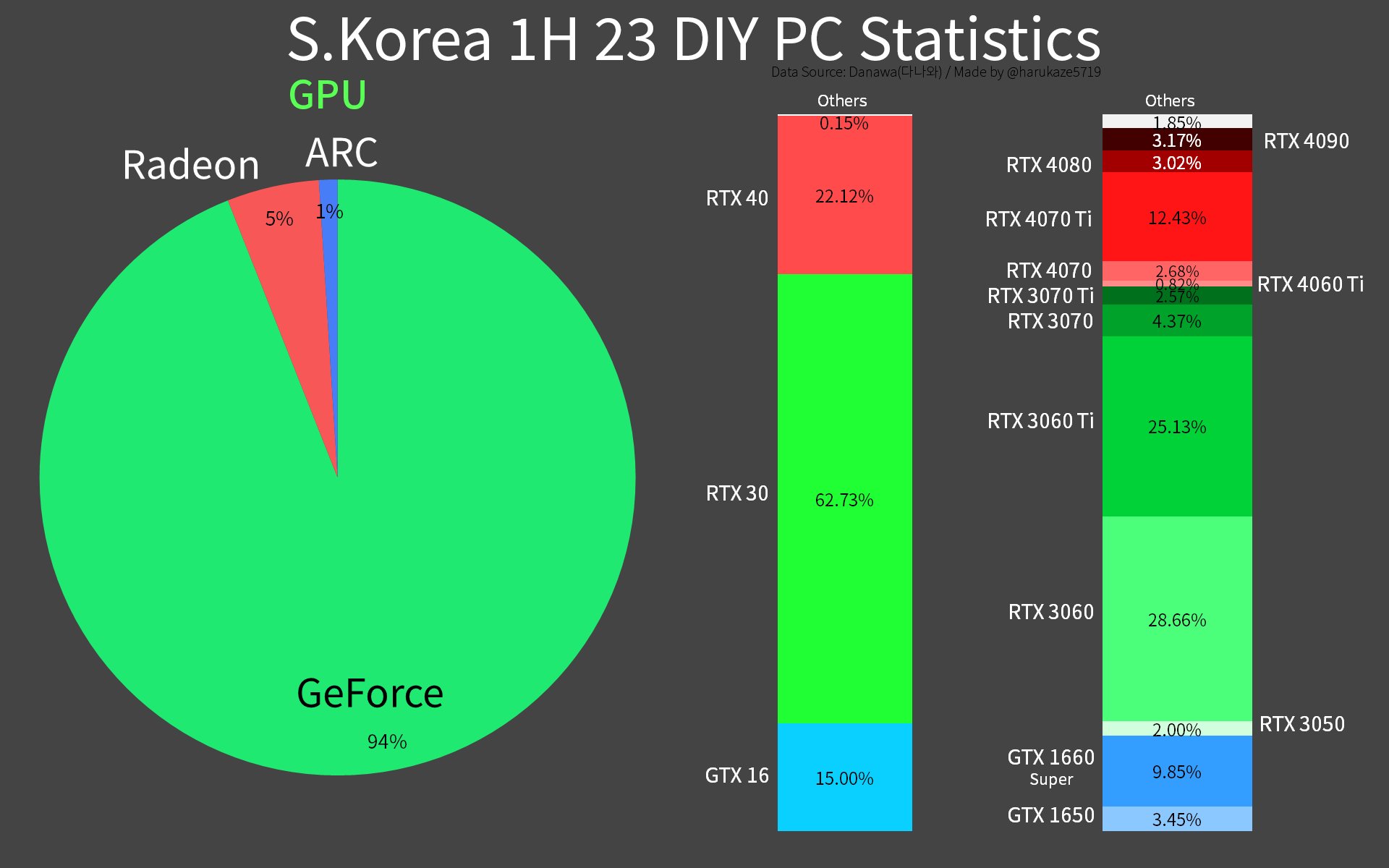

The Steam results don't match people's day-to-day experience with their friends, online communities or sales charts. This is why people look for answers to explain away the data.

How many people actually have in mind the sales charts of every major market (China, Japan, Korea, Europe, Australia, North America), including pre-built PCs?

It doesn't make sense intuitively that the three most popular gaming cards would be 8 years old.

The 1060 is from 2016, and the 16xx cards are from 2019.

~40% of the market is using

I count around 26.5% of the DX12 GPU market in the latest Steam Hardware Survey, about the same as Ampere if we exclude mobile (23.58%).

I think that as long as a 1050 or 1060 is good enough to play some of the most popular titles in the world, those cards simply get resold to a kid or a gamer in a third-world country who is happy to have them, so they never drop out of the survey.
