What the future holds for RDNA

If we take stock of what AMD has achieved with RDNA in four years, and appraise the overall success of the changes, the end result will fall somewhere between Bulldozer and Zen. The former was initially a near-catastrophic disaster for the company but redeemed itself over the years by being cheap to make. Zen, on the other hand, has been outstanding from the start and forced a seismic upheaval of the entire CPU market.

Although it's too hard to judge precisely how good it's been for AMD, RDNA and its two revisions are clearly neither of those. Its market share in the discrete GPU sector has fluctuated a little during this time, sometimes gaining ground on Nvidia, and losing at other times, but generally, it has remained the same.

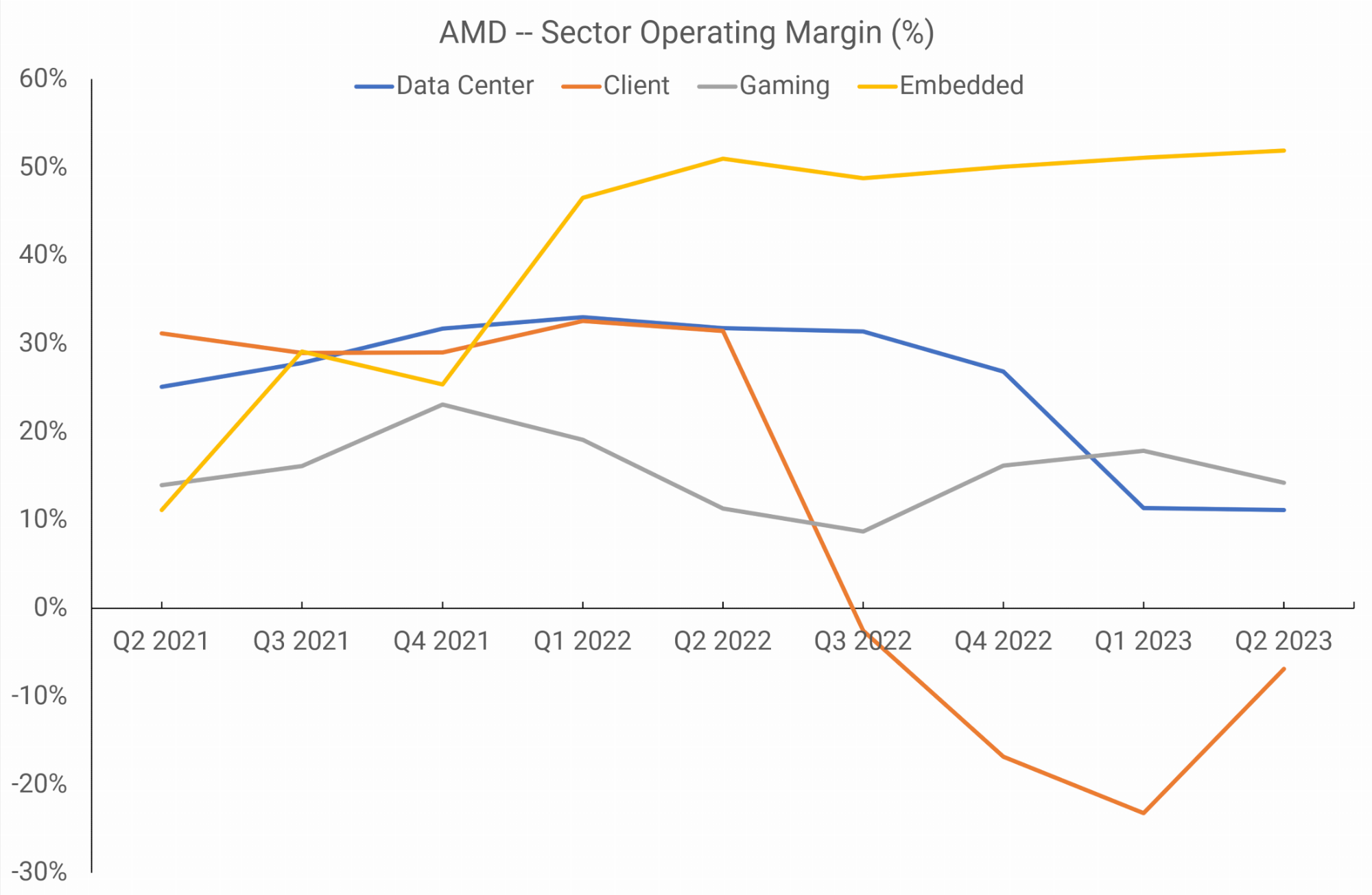

The Gaming division has made a small but steady profit since its inception, and although margins seem to be declining at the moment, there's no sign of impending doom. In fact, in terms of margins only, it's AMD's second-best sector!

And even if it weren't, AMD makes more than enough cash from its embedded section (thanks to the purchase of Xilinx) to stave off any short periods of overall loss.

But where does AMD go from here?

AMD seems to have all of the engineering skills and know-how they need to stay on the current course of minor architectural updates, continue to accrue small margins, and hold a narrow slice of the entire GPU market.

https://www.techspot.com/article/2741-four-years-of-amd-rdna/
