the 14th gen do have vccsa bug, it doesn't impact all cpus though.

You can lower the SA voltage, set it to static, or set an offset.

Is it true that 7950X3D suffers from stutter on games?

- Thread starter sblantipodi

- Start date

You can lower SA voltage/set it to static/set an offset.

Reading more, it seems to be more of an ASUS problem, since two users I just saw don't have the issue with the Tachyon or Dark, just their Apex.

CyberJunk

Supreme [H]ardness

- Joined

- Nov 13, 2005

- Messages

- 4,242

The 5800X3D is my favorite AMD CPU of all time. It's just "great".

sblantipodi

2[H]4U

- Joined

- Aug 29, 2010

- Messages

- 3,765

I understand your choice and it is a good one, the 7950X3D is a very nice processor that you can just plug and play. That's what made AMD jump up.

But to say the 13900k is crap because you can't stabilize its clock speed/heat and you can't stabilize its RAM is just a lack of skill. Yes, I know it's hard and time-consuming, but it's possible.

1X900k(s) are enthusiast-grade CPUs, so it is not just to plug and play.

I have been building PCs for more than 30 years, so I doubt that I lack skills.

Stabilizing Intel requires too much time, and most of the time the stability is only apparent, because some other workloads may still fail.

Stabilizing for AVX2 requires the CPU to run at 250W at least, and with that kind of power it is nearly impossible not to throttle even with a good 360mm AIO (with a TAMB of 21°C), let alone in regions where TAMB is higher.

Intel is just crap compared to AMD this round, and bashing AMD because it does not work well is simply stupid and surreal.

AMD just works! You can't say the same for Intel.

I build PC since more than 30 years, I doubt that I lack skills

Stabilizing Intel requires too much time and most of the time the stability is only apparent because some "others workloads" may fail.

Stabilizing for AVX2 requires the CPU to run at 250W at least and with that kind of power is nearly impossible to not throttle even with a good 360mm AIO (with a TAMB of 21°C) leave alone in regions where TAMB is higher.

Intel is just crap compared to AMD at this round and bashing AMD because it does not works well is simply stupid and surreal.

AMD Just Works! You can't tell the same for Intel

Of course it takes time.

250W is too little; you need more, and it's all about which system will run 0.5-2% faster.

I've also been using AMD for a long time and yes, I agree that nowadays AMD are closer to "just works" than Intel.

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,760

https://www.techspot.com/review/2821-amd-ryzen-7800x3d-7900x3d-7950x3d/

Techspot did an in-depth dive on the bastard child that is the 7900X3D, including testing out how a hypothetical 7600X3D would perform. In the context of this thread, my primary takeaway is that while some games can definitely take advantage of 8 cores, more than 8 cores definitely does not benefit any current game. 33% more cores gets you at best 15% more performance and more often less than that.

sblantipodi

2[H]4U

- Joined

- Aug 29, 2010

- Messages

- 3,765

https://www.techspot.com/review/2821-amd-ryzen-7800x3d-7900x3d-7950x3d/

Techspot did an in-depth dive on the bastard child that is the 7900X3D, including testing out how a hypothetical 7600X3D would perform. In the context of this thread, my primary takeaway is that while some games can definitely take advantage of 8 cores, more than 8 cores definitely does not benefit any current game. 33% more cores gets you at best 15% more performance and more often less than that.

That's not really true.

That article seems like a bad cut and paste from this video:

View: https://www.youtube.com/watch?v=Gu12QOQiUUI

As you can see, there are various games that benefit from more than 8 cores, like Spiderman, Horizon Forbidden West, Hogwarts Legacy, Star Wars Jedi Survivors, Counter Strike, etc.

8 cores is becoming the new old, and I think that it will not be sufficient in the next 2 or 3 years.

Games like Horizon Forbidden West have started to do in-game shader compilation (even pre-game compilation); add DirectStorage, and that will stress this situation even more.

Domingo

Fully [H]

- Joined

- Jul 30, 2004

- Messages

- 22,645

Aren't Techspot and Hardware Unboxed the same folks?

https://www.techspot.com/review/2821-amd-ryzen-7800x3d-7900x3d-7950x3d/

Techspot did an in-depth dive on the bastard child that is the 7900X3D, including testing out how a hypothetical 7600X3D would perform. In the context of this thread, my primary takeaway is that while some games can definitely take advantage of 8 cores, more than 8 cores definitely does not benefit any current game. 33% more cores gets you at best 15% more performance and more often less than that.

Um...Techspot and HUB are the same folks (Steve Walton), so the Techspot articles are basically the script for the videos on HUB.

And yeah, there are definitely games that benefit from more than 6 cores, but 6 x3d cores are still significantly faster than 8 non-x3d cores...ergo the 7900x3d and (fake) 7600x3d are faster than all of the non-x3d parts.

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,760

that's not really true.

that article seems a bad cut and paste from this video:

View: https://www.youtube.com/watch?v=Gu12QOQiUUI

as you can see there are various games that benefits from more than 8 cores like Spiderman, Horizon Forbidden West, Hogwarts Legacy, Star Wars Jedi Survivors, Counter Strike ecc...

8 cores is becoming the new old and I think that it will not be sufficient in the next 2 or 3 years...

games like Horizon Forbidden West started to have ingame shader compilation (even pre-game compilation), add direct storage that will stress this situation even more...

I don't use Youtube for tech reviews, much prefer the text format.

Hogwarts Legacy, Spiderman, and Jedi Survivors all have single-digit gains from 7800X3D to 7950X3D based on the article. That can easily be chalked up to max boost core speed differences. In fact, looking at the 7700X vs 7950X numbers tells pretty much the same story. The only double-digit gains are with Counterstrike 2, but with FPS at 300-500 that hardly matters, and percentage-wise it's still in the single-digit range.
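To put numbers on why a double-digit FPS gain hardly matters at 300-500 FPS, it helps to convert FPS to frame time. A minimal Python sketch; the 400 -> 430 and 60 -> 90 FPS figures are made-up illustration values, not numbers from the article:

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def frame_time_saved_ms(fps_before: float, fps_after: float) -> float:
    """Milliseconds saved per frame when going from fps_before to fps_after."""
    return frame_time_ms(fps_before) - frame_time_ms(fps_after)


# Hypothetical example values: the same +30 FPS at two different baselines.
for before, after in [(400, 430), (60, 90)]:
    gain_pct = (after / before - 1.0) * 100.0
    saved = frame_time_saved_ms(before, after)
    print(f"{before} -> {after} FPS: +{gain_pct:.1f}%, {saved:.2f} ms less per frame")
```

The same +30 FPS saves a fraction of a millisecond per frame at 400 FPS but several milliseconds at 60 FPS, which is why the high-framerate result looks bigger than it feels.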

I would love to see you back up your statement with Horizon Forbidden West but I can't seem to find any reviews of CPU performance with my google-fu.

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

I build PC since more than 30 years, I doubt that I lack skills

Stabilizing Intel requires too much time and most of the time the stability is only apparent because some "others workloads" may fail.

Stabilizing for AVX2 requires the CPU to run at 250W at least and with that kind of power is nearly impossible to not throttle even with a good 360mm AIO (with a TAMB of 21°C) leave alone in regions where TAMB is higher.

Intel is just crap compared to AMD at this round and bashing AMD because it does not works well is simply stupid and surreal.

AMD Just Works! You can't tell the same for Intel

Bingo. After trying so many 13th and 14th Gen CPUs, I can say they have degradation and stability issues. You absolutely need a 2-DIMM board, and please no Asus, for RAM faster than 6000, if even that.

AMD is more stable and better, and it uses much less power, so much less heat is dumped into the case and it is easier to build a quiet, air-cooled system.

Intel's 10nm process is fragile at the high end. In the mid range and lower it is not as big a deal, because with fewer cores there is much more power and thermal headroom, so it will not be noticed. But at the high end, even at Intel's stock limits (253W PL1 and PL2, 307A current limit), degradation is still a thing, and no one knows why or the whole story.

As for the topic, dual CCDs have cross-CCD latency issues.

The single-CCD AMD CPUs are the best bet.

The 7800X3D works best as a single-CCD part, since no game benefits from more than 8 cores, or only marginally at best. It is stable, uses very little power, and dumps little heat into the case.

But if games start to scale to more cores, please, AMD or Intel, we need a 10-12 core CPU on a single CCD/ring.

sblantipodi

2[H]4U

- Joined

- Aug 29, 2010

- Messages

- 3,765

Bingo after trying so many 13th and 14th Gen CPUs, they have degradation and stability issues. You absolutely need a 2 DIMM board and please no Asus for faster than 6000 RAM and if even that.

AMD is more stable and better and uses much less power thus much less heat dumped into the case and easier for quiet system and air cooling.

Intel 10nm process at the high end is fragile and not good. Mid range and lower not as big deal because power and thermal headroom so much more as less cores and will not be noticed. But high end even at Intel stock power limits 253 PL1 and PL2 and 307W current limit degradation is still a thing and no one knows why or whole story.

As for the topic, dual CCDs have cross latency issues.

The single CCD AMD CPUs are best bet.

7800X3D works best as single CCD like no game benefits from more than 8 cores or marginally at best. And it is stable and uses so little power and little heat dumped into the case.

But if games start to scale to more cores please AMD or Intel we need a 10-12 core CPU on a single CCD/ring.

I don't agree completely on this...

HwUnboxed made a good video where you can see that some games are starting to leverage more than 8 cores, giving some benefit to the 7950X3D.

All in all, the 7950X3D is the best gaming CPU currently and the most "future proof" if money is not a problem.

View: https://youtu.be/Gu12QOQiUUI?si=FcR5KBN6JVd6uLPN

Don't forget about DirectStorage and in-game shader compilation, which are more and more present in games.

Fast asset loading plus shader compilation will require more cores, since that is a highly parallel workload.

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

I don't agree completely on this...

HwUnboxed made a good video where you can see that some games is starting to leverage more than 8 cores, giving some benefits to 7950X3D.

All in all 7950X3D is the best gaming CPU currently and the more "future proof" if money is not a problem.

View: https://youtu.be/Gu12QOQiUUI?si=FcR5KBN6JVd6uLPN

don't forget about Direct Storage and in game Shader Compiling that are more and more present in games,

fast assets loading + shader compilation will require more cores since that is a highly parallel workload.

It's got dual CCDs, so there is a cross-CCD latency penalty, which is not good in all situations, and you need to use the stupid Xbox Game Bar. Why oh why is there no CPU with more than 8 P-cores on a single ring/CCD?

Well, there is the 10900K with 10 cores on a single ring, but that is the old Comet Lake architecture, which has significantly worse IPC.

Games seem to benefit only marginally from more than 8 cores at best, but as you said, some are showing they do, even if only a little.

Money is no object to me, but I'm not crazy about hybrid architectures or the dual-CCD latency penalty, so I went with the 7800X3D. Otherwise I would have gone with the 7900X3D or 7950X3D, if they had 12 or 16 cores on the same CCD.

I would even have gone with Intel 13th or 14th Gen if they had more than 8 P-cores and not those E-cores, though in that case, Intel 13th and 14th Gen appear to have random stability, degradation, and IMC inconsistency issues, plus they use so much power and dump a lot of heat into the case. Still, I want more than 8 non-hybrid cores on a single ring so badly that I would be willing to take my chances on that.

https://www.reddit.com/r/intel/comments/18eniws/whats_stopping_intel_from_making_a_10_pcore_cpu/

They say very few if any games benefit from more than 8 cores, which is one of the reasons Intel does not make one.

Also, degradation is very real on 13th and 14th Gen, and no one seems to know what the stock speeds are:

https://www.overclock.net/search/1432718/?q=degradation&t=post&c[thread]=1807439&o=relevance

View: https://www.youtube.com/watch?v=kQjMkr013Cw

If you google it, there are stories of poor quality control, degradation, and Intel Raptor Lake crashing Unreal games.

I have experienced it myself.

Google Zen 4 or Ryzen 7000 and you find nothing about degradation, and it's been out for 1 1/2 years. This is despite them running at 95C on auto settings.

I just wish AMD had a CPU with more than 8 cores on a single CCD, and it appears Zen 5 is not going to have more than 8 cores per CCD either.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,605

Its got dual CCDs so cross CCD latency penalty which is not good in all situations and need to use stupid XBOX Game bar.. Why oh why is there no CPU with more than 8 P cores on a single ring/CCD?

People say this a lot. But show me some data on scenarios where that latency actually matters enough that it performs notably worse, because I haven't seen it.

It's likely Intel WOULD have 10 P-cores on their CPUs if they didn't use so much power and generate so much heat. But they haven't been able to fix that for 4 generations...

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,605

Also degradation is very real on 13th and 14th Gen and no one seems to know what stock speeds are:

https://www.overclock.net/search/1432718/?q=degradation&t=post&c[thread]=1807439&o=relevance

View: https://www.youtube.com/watch?v=kQjMkr013Cw

That link is about overclocking degradation, which has always been a real thing. And AMD is affected by it as well. We have AMD users in this forum who have experienced it.

If you google it there are stories of poor quality control and degradation and intel Raptor Lake crashing Unreal games

There is very recent news of i7 and i9 chips possibly not being able to sustain their stock spec. That is different from OC degradation, and definitely concerning.

III_Slyflyer_III

[H]ard|Gawd

- Joined

- Sep 17, 2019

- Messages

- 1,252

Here is a stupid question for everyone: Why can't AMD put the vcache on the other CCD as well?

Heat maybe? I don't know, I would think vcache on both would be ideal and no need for software solutions past wanting to stay on a single CCD. Not sure how 7000 series does with cross CCD comms in general in games, but when I went with an all core OC on my 5950X, cross CCD in games does not cause any measurable micro stuttering anymore. I actually gained a massive (and very measurable) performance boost too.

Digital Viper-X-

[H]F Junkie

- Joined

- Dec 9, 2000

- Messages

- 15,116

I am not a hardware engineer, so this is a guess at best.

The design was meant to satisfy people who wanted the best of both worlds: a fast multi-core CPU that has more than 8 cores and can clock higher than the 7800X3D, and also people who want the best when it comes to gaming. So one chiplet handles gaming scenarios, and both chiplets handle multi-core workloads that can utilize more than 8 cores, with the non-X3D chiplet also being able to clock up to 5.6-5.7 GHz on non-cache-intensive workloads. I would also assume that games that use more than 8 cores will suffer from the latency of going across the chiplets, which would also negate the benefit of having the 3D cache there.

Do we have games that can take advantage of more than 8 cores yet? (Maybe Star Citizen?)

The design was meant to satisfy people that wanted the best of both worlds, a fast multi core cpu that has more than 8 cores, and can clock higher than the 7800x3d, and also people that want the best when it comes to gaming. So one chiplet to handle gaming scenarios, and then both chiplets for multicore work loads that can utilize more than 8 cores, which the non X3d chiplet being able to clock up to 5.6-7 ghz also on non cache intensive workloads. I would also assume that games that will use more than 8 cores will suffer from the latency of going across the chiplets, which would also negate the benefit of having the 3D Cache there.

Do we have games that can take advantage of more than 8 cores yet? (Maybe Star Citizen?)

Here is a stupid question for everyone: Why can't AMD put the vcache on the other CCD as well?

This was touched on earlier in the thread. Basically vcache can’t run at very high clock speeds so vcache on both CCDs limits clocks on both CCDs which would result in a significant performance loss in applications that don’t benefit from additional cache. The hybrid model is a way for AMD to limit some of that performance loss by allowing at least one of the CCDs to clock higher.

This was touched on earlier in the thread. Basically vcache can’t run at very high clock speeds so vcache on both CCDs limits clocks on both CCDs which would result in a significant performance loss in applications that don’t benefit from additional cache. The hybrid model is a way for AMD to limit some of that performance loss by allowing at least one of the CCDs to clock higher.

Didn't see it, thank you!!!!

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,605

Here is a stupid question for everyone: Why can't AMD put the vcache on the other CCD as well?

Very expensive to make and to sell. Marginal benefit in vcache sensitive apps (primarily gaming).

This was touched on earlier in the thread. Basically vcache can’t run at very high clock speeds so vcache on both CCDs limits clocks on both CCDs which would result in a significant performance loss in applications that don’t benefit from additional cache. The hybrid model is a way for AMD to limit some of that performance loss by allowing at least one of the CCDs to clock higher.

This, it's a best of both worlds situation. Basically for clock sensitive apps, they start on the non vcache cores and spill to the lower clocked vcache cores only if needed (in which case the clock drop is much smaller than if they started the other way), while also providing large cache for apps (games) that get a bigger benefit from that. If they did both cores vcache, games wouldn't benefit as they rarely exceed 8 cores and most other apps would be slower. Plus it'd be more expensive to make. If there were no clock penalty for the vcache, then yeah, put it on both.

This, it's a best of both worlds situation. Basically for clock sensitive apps, they start on the non vcache cores and spill to the lower clocked vcache cores only if needed (in which case the clock drop is much smaller than if they started the other way), while also providing large cache for apps (games) that get a bigger benefit from that. If they did both cores vcache, games wouldn't benefit as they rarely exceed 8 cores and most other apps would be slower. Plus it'd be more expensive to make. If there were no clock penalty for the vcache, then yeah, put it on both.

People need to stop saying "best of both worlds". It isn't. It's a compromise. A 7950X3D is still slower than a 7950X in most non-gaming scenarios. Best of both worlds means just that: BEST. It would have to be faster than a 7950X in non-gaming workloads and faster than a 7800X3D in gaming workloads.

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

That link is about overclocking degradation. Which has always been a real thing. And AMD is affected by it, as well. We have AMD users in this forum whom have experienced it.

There is very recent news of i7 an i9 possibly not being able to sustain their stock spec. And that is different from OC degradation. And definitely concerning.

Yeah, that link is about overclocking degradation, but it seems Intel is more affected than AMD. Have AMD Zen 4 users experienced it, and if so, as fast as Intel users? And is there any Zen 3 or Zen 4 degradation from just Curve Optimizer, or only from static OC and PBO, and if so, how fast? I have heard reports of Raptor Lake degradation in just a few hours at 300W power draw with 80C+ temps. I highly doubt that happens on any AMD chips.

And the bottom report is very concerning and shows why degradation is so fast at overclocked settings.

I have heard reports of people even running an undervolted static vcore on Raptor Lake, and after just a few months of gaming it degraded.

I mean, technically, aren't these Raptor Lake CPUs kind of always extremely overclocked out of the box, even with Intel limits enforced? The base clock is only about 3GHz, yet they run at over 5GHz.

Whereas with AMD, the base clock is 4.2 to 4.5GHz on Zen 4 CPUs, X3D and regular variants, and they only run at 5GHz (X3D, if even that) up to 5.6GHz, which, while over base spec, is not nearly as far over as Intel Raptor Lake, if base clock speed has any meaning in this.

In any case, I have a gut feeling these Intel Raptor Lake chips degrade much faster than AMD, overclocked or not, which is concerning, as you mentioned.

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

People say this a lot. But.....show me some data on some scenarios where that latency actually matters enough that it performs notably worse?--------because I haven't seen it.

Its likely Intel WOULD have 10 P cores on their CPUs, if they didn't use so much power and generate so much heat. But, they haven't been able to fix that for 4 generations...

1% and 0.1% lows tank because of it.

I mean, look at benchmarks and see how the 7700X outperformed the 7900X and 7950X because of dual-CCD issues in early Zen 4 benchmarks.
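For anyone unsure what "1% lows" means: definitions vary between reviewers, but one common approach is to take the slowest 1% of frames and report their average as an FPS figure. A rough Python sketch under that assumption (the frame times below are made up, not benchmark data):

```python
def average_fps(frame_times_ms):
    """Average FPS over a capture of per-frame times in milliseconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def percentile_low_fps(frame_times_ms, fraction=0.01):
    """FPS equivalent of the mean frame time of the slowest `fraction` of frames.

    fraction=0.01 gives "1% lows", fraction=0.001 gives "0.1% lows".
    This is one common definition; some tools use a percentile instead.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, int(len(slowest_first) * fraction))
    slowest = slowest_first[:count]
    return 1000.0 / (sum(slowest) / count)


# Hypothetical capture: mostly 5 ms frames with a few 20 ms hitches.
capture = [5.0] * 990 + [20.0] * 10
print(f"average: {average_fps(capture):.0f} FPS")
print(f"1% low:  {percentile_low_fps(capture):.0f} FPS")
```

A handful of slow frames barely moves the average but dominates the 1% low, which is why stutter shows up in the lows first.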

Intel could have 10-12 P-cores no problem, as 1 P-core is similar in power to a 4 E-core cluster. Though their 10nm node is absolute crap: it is fragile, degrades too easily, and has such high power consumption that it dumps way too much heat into the case, with so many quality control issues that I may not get one anyway after some experience.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,605

1% and 0.1 lows tank because of it.

I mean look at benchmarks and see how 7700X outperformed 7900X and 7950X because of dual CCD issues in early Zen 4 benchmarks

Intel could have 10-12 P cores no problem as 1 P core is similar in power to 4 e-core cluster. Though their 10nm node is absolute crap and fragile and degrades too easily and has such high power consumption it dumps way too much excess heat into case and too many quality control issues that I may not get it anyways after some experience.

Regarding this: "Intel could have 10-12 P cores no problem as 1 P core is similar in power to 4 e-core cluster"

...Well, no. It's not that simple. Run your 12th/13th/14th gen CPU through Blender or Cinebench and record the temps.

Now disable the E-cores and run the tests again. The temps will be very similar, if not the same.

I don't think cooling 10 P-cores would be realistic. And certainly not 12.

Regarding dual CCD CPUs being worse for gaming: I said show me the data

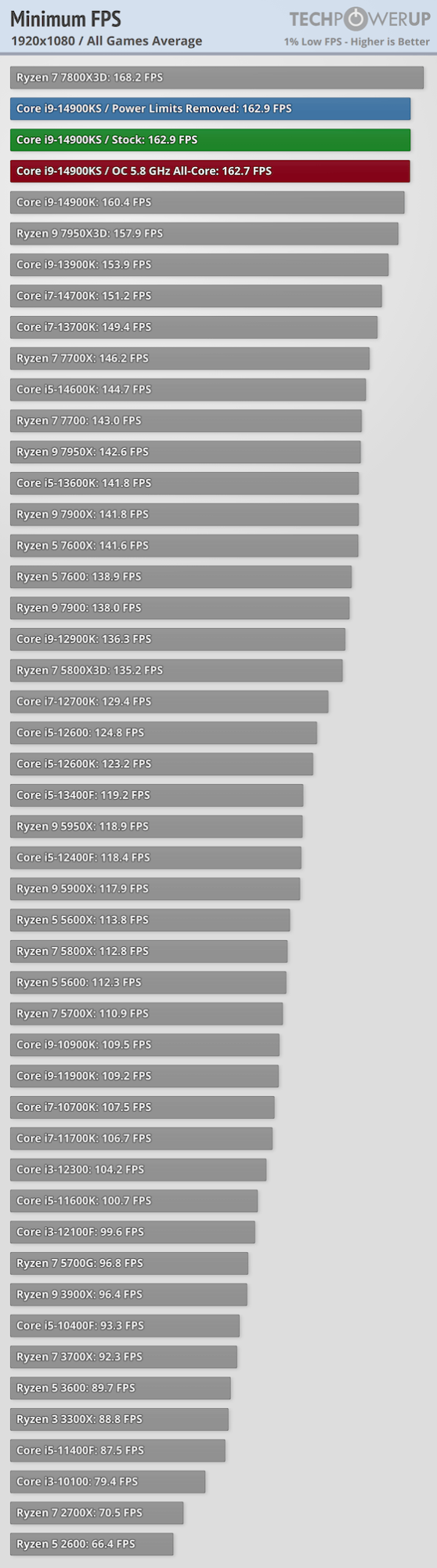

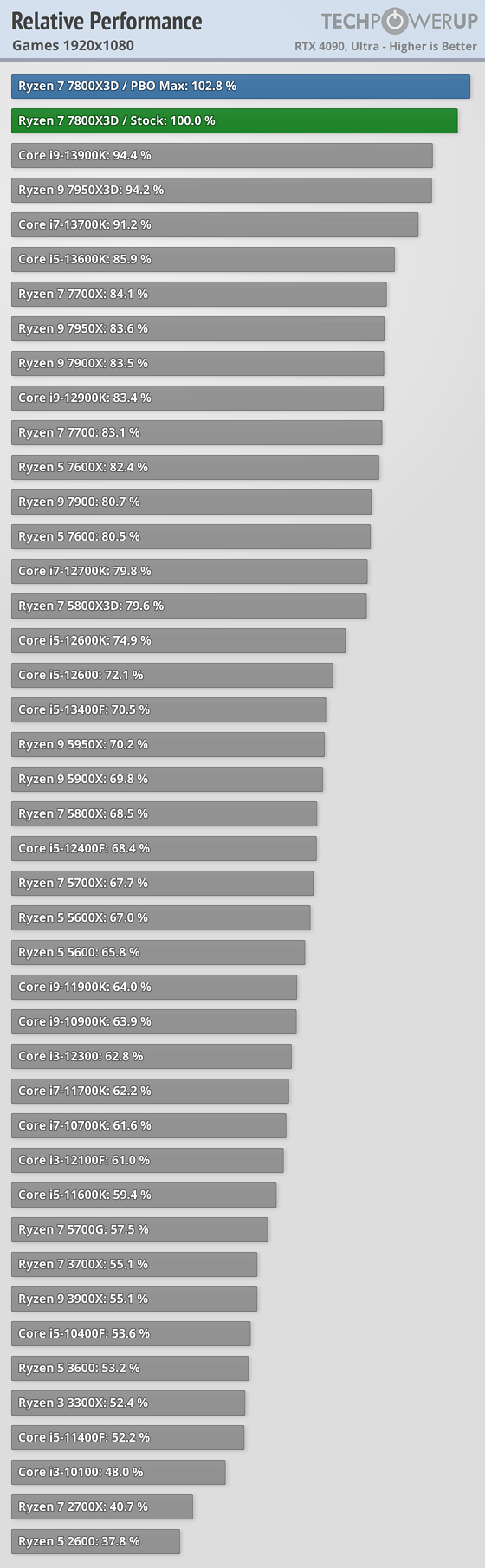

Here is Techpowerup's 1% lows page from their 14900KS review:

https://www.techpowerup.com/review/intel-core-i9-14900ks/21.html

Click the toggle to see individual games. It's only the super high framerate games which have over a 10 fps difference at 1080p. And at those framerates, it doesn't matter for 99% of gamers.

Some other games were closer, within a handful of frames or less. And then there are games where the dual-CCD chips are faster.

And in the all-games average, the 7950X is only 3.6 frames slower than the 7700X.

It's a similar story in Hardware Unboxed's Intel 14th gen review. However, they do have the interesting result of Plague Tale: Requiem, which seems to be the only real instance I have seen where the dual-CCD CPUs take a fair hit in a game which isn't geared for high frames/low latency. It's been repeated in some of their other videos, and they said they did multiple test runs and even clean wipes and Windows reinstalls to try and 'fix' it. But we are still only talking about a 10 frame difference. A little more noticeable at these "lower" framerates, but still nothing shattering. The dual-CCD chips are great gaming chips.

View: https://youtu.be/0oALfgsyOg4?si=40efnkWLnAT8ZJrU

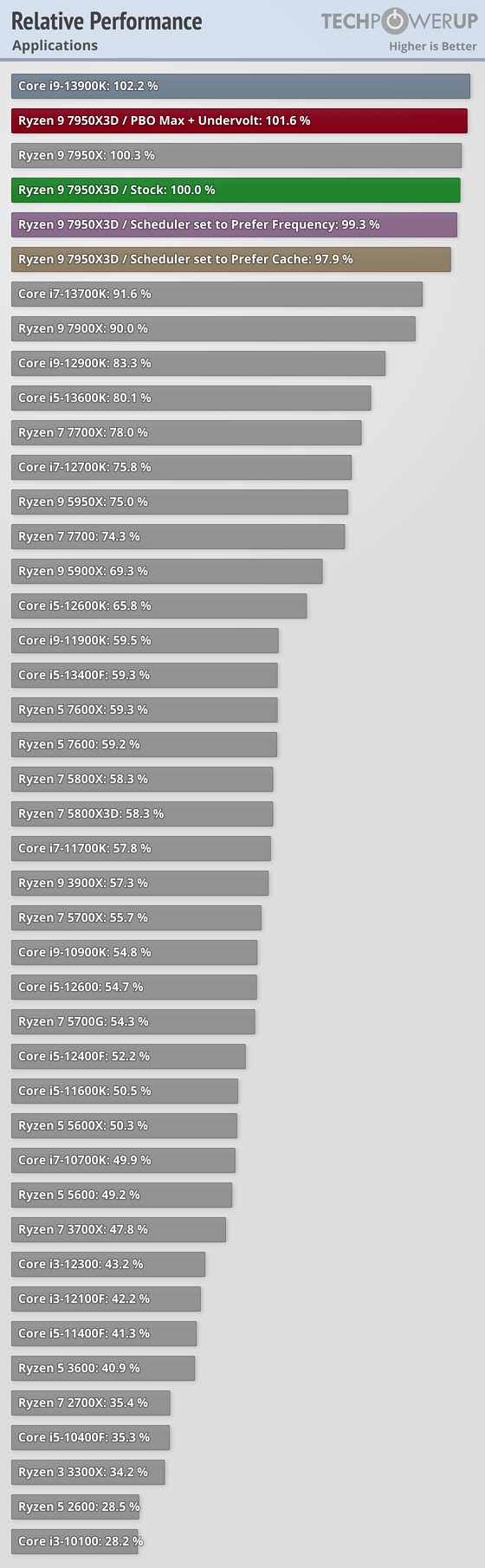

People need to stop saying "best of both worlds" it isn't. It's a compromise. a 7950x3d is still slower than a 7950x in most non-gaming scenarios. Best of both worlds means just that. BEST. It would have to be faster than a 7950x in non gaming workloads and faster than a 7800x3d in gaming workloads.

In Techpowerup's 7950X3D review, which is titled "Best of Both Worlds":

They have it 0.3% worse overall, in application performance.

And in the 7800x3d review, about 6% worse in gaming at 1080p. Which isn't nothing, but could also be less, now that improved chipset drivers and Xbox Gamebar updates are out, for scheduling issues.

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

Regarding this: "Intel could have 10-12 P cores no problem as 1 P core is similar in power to 4 e-core cluster"

.....well, no. Its not that simple. Run your 12th/13th/14th gen CPU through blender or cinibench and record the temps.

Now disable the E-Cores and run the tests again. The temps will be very similar, if not the same.

I don't think cooling 10 P-cores would be realistic. And certainly not 12.

That's only because the 8 P-cores clock higher without the E-cores, thanks to more thermal headroom. 12 P-cores would all clock lower to compensate in all-core workloads and have similar power draw. It's like how all cores on the 7950X clock lower than all cores on the 7900X because there are more of them. Same with the 5950X vs the 5900X.

It is that simple. In single-core workloads, or workloads only using a few cores, they could clock just as high. 4 E-cores are like 1 P-core, but they give more multi-threaded performance per area for perfectly parallel workloads, even though a 10-12 P-core solution would be better for gamers.
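A back-of-the-envelope way to see the "more cores clock lower at the same power" argument: assume per-core power grows roughly with the cube of frequency (voltage rising with clock). That cubic exponent and the 5.0 GHz starting clock are assumptions for illustration only, not measured Intel or AMD figures:

```python
def all_core_clock_at_same_power(base_cores, base_clock_ghz, new_cores, exponent=3.0):
    """Estimate the all-core clock that keeps total power constant.

    Toy model (an assumption, not vendor data): total power is
    cores * clock**exponent, so holding it fixed gives
    new_clock = base_clock * (base_cores / new_cores) ** (1 / exponent).
    """
    return base_clock_ghz * (base_cores / new_cores) ** (1.0 / exponent)


# Hypothetical starting point: 8 cores at 5.0 GHz all-core.
for cores in (8, 10, 12):
    clock = all_core_clock_at_same_power(8, 5.0, cores)
    print(f"{cores} cores at the same power budget: about {clock:.2f} GHz")
```

Under this toy model, going from 8 to 12 cores costs roughly an eighth of the all-core clock, not a third. It says nothing about hot-spot temperatures or die area, which is the other side of the argument in this thread.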

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,605

Thats only because the 8 P cores without the e-cores clock higher because of more thermal headroom.

Well, again, no. You see the same behavior with a 13600K, which does 5.1 max whether it's 1 P-core, all cores, or 6 P-cores. You still see similar temps between all cores and no E-cores on a 13600K. And that's only 6 P-cores.

I know the 13900K and 14900K have Thermal Velocity Boost, which would probably kick in more often without active E-cores. But the temp behavior exists with a 13600K. I've had both a 12700K and a 13600K.

12 P cores all would clock lower to compensate in all core workloads and have similar power draw. Its like all cores on 7950X clock lower than all cores on 7900X because there are more of them. Same with 5950X vs 5900X.

Correct. But I think they would probably have to clock way down with 10 and 12 cores to keep the temps in check. It would still be better for highly threaded workloads. As it stands, it's probably more manageable overall to do 8 E-cores.

At least at this point in time, there really aren't any games which truly seem to benefit a lot from more than 8 cores. Architectural differences seem more important for games than core count, and then clockspeed within each architecture. The 14600K keeps on the heels of the 13700K in most games. AMD's non-vcache parts all perform similarly, except in a few titles where 6 cores isn't quite enough or where clockspeed gives a larger than typical advantage. But vcache trumps core count and clockspeed.

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,760

Here is a stupid question for everyone: Why can't AMD put the vcache on the other CCD as well?

It would be a very expensive CPU few people would buy. There's no performance benefit to having 16 V-cache cores. In fact, it would be a performance penalty in productivity apps.

I am not a hardware engineer, so this is a guess at best.

The design was meant to satisfy people that wanted the best of both worlds, a fast multi core cpu that has more than 8 cores, and can clock higher than the 7800x3d, and also people that want the best when it comes to gaming. So one chiplet to handle gaming scenarios, and then both chiplets for multicore work loads that can utilize more than 8 cores, which the non X3d chiplet being able to clock up to 5.6-7 ghz also on non cache intensive workloads. I would also assume that games that will use more than 8 cores will suffer from the latency of going across the chiplets, which would also negate the benefit of having the 3D Cache there.

Do we have games that can take advantage of more than 8 cores yet? (Maybe Star Citizen?)

Almost none, and the games that do benefit from additional cores only benefit marginally. Hardware Unboxed/Techspot did a comprehensive review that is linked above (you can also check out the 7900X3D thread). They even went so far as testing a hypothetical 7600X3D (6-core vcache) by disabling the non-vcache CCD on the 7900X3D. Here are the highlights:

1. Comparing the hypothetical 7600X3D to the 7800X3D, the largest performance difference was about 15%. 33% more cores nets at most a 15% performance boost, and often less than that.

2. Out of the 12-game test, only 4 games were faster on the 7900X3D than on the 7800X3D. With the exception of CoD MW2 at 7%, it only does better in those games by less than 5%. It loses the remaining games, sometimes by up to 15%, barely doing better than a 7600X3D.

3. The 7800X3D and 7950X3D trade blows for the top spot but are always less than 10% apart.

4. All of the X3D parts are faster for gaming than their non-X3D counterparts by about 15% on average.

8 vcache cores are basically the most games can take advantage of at the moment. There might be some advantage with 10 vcache cores but the scaling won't be linear since we already don't see linear scaling going 6 to 8.
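The 6-to-8-core arithmetic from point 1 can be sketched in Python. `scaling_efficiency` is just a helper name made up here; it divides the performance gain by the core-count gain, so 1.0 would mean perfectly linear scaling:

```python
def scaling_efficiency(cores_before, cores_after, perf_gain):
    """Fraction of the added core count that shows up as performance.

    perf_gain is a fraction (0.15 means 15% faster).
    """
    core_gain = cores_after / cores_before - 1.0
    return perf_gain / core_gain


# Best case quoted above: 6 -> 8 vcache cores for about 15% more performance.
core_gain = 8 / 6 - 1.0
efficiency = scaling_efficiency(6, 8, 0.15)
print(f"core increase: {core_gain:.0%}, scaling efficiency: {efficiency:.0%}")
```

Even in the best case, less than half of the extra cores turn into extra performance, which is the basis for expecting sub-linear gains from a hypothetical 10-core vcache part.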

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

Almost none, and the games that do benefit from additional cores only benefit marginally. Hardware Unboxed/Techspot did a comprehensive review that is linked above (you can also check out the 7900X3D thread). They even went so far as testing a hypothetical 7600X3D (6-core vcache) by disabling the non-vcache CCD on the 7900X3D. Here are the highlights:

1. Comparing the hypothetical 7600X3D to 7800X3D, the largest performance difference was about 15%. 33% more cores nets at most a 15% performance boost and often less than that.

2. Out of the 12 game test, only 4 was faster on the 7900X3D as compared to the 7800X3D. With the exception of CoD MW2 at 7%, it only does better in those games by less than 5%. The remaining games it loses, sometimes by up to 15%, barely doing better than a 7600X3D.

3. The 7800X3D and 7950X3D trade blows for the top spot but are always less than 10% apart.

4. All of the X3D parts are faster for gaming than their non-X3D counterparts by about 15% on average.

8 vcache cores are basically the most games can take advantage of at the moment. There might be some advantage with 10 vcache cores but the scaling won't be linear since we already don't see linear scaling going 6 to 8.

Which games marginally benefit form more than 8 cores? Would it be Cyberpunk Spiderman Remastered and its addons like Miles Morales and The Last of Us Part 1 and Starfield and Dragons Dogma 2 be such examples of games that may marginally benefit form more than 8 cores?

Dude literally said where to find this info in the 2nd sentence of the post you quoted.

Regarding this: "Intel could have 10-12 P cores no problem as 1 P core is similar in power to 4 e-core cluster"

.....well, no. It's not that simple. Run your 12th/13th/14th gen CPU through Blender or Cinebench and record the temps.

Now disable the E-Cores and run the tests again. The temps will be very similar, if not the same.

I don't think cooling 10 P-cores would be realistic. And certainly not 12.

E-cores generate more heat per mm² of die, no?

In your example, isn't it just because in both scenarios they max out on temperature anyway? Couldn't you do the same with more P-cores and have them run in a more power-efficient window?

Because of the architecture changes on AMD's CPUs with more than 8 cores, and other differences, I am not sure benchmarks can tell us which games benefit from more than 8 cores, but we can find which ones do on AMD (maybe they take advantage of the 9th and 10th cores, but not enough to make up for the cost of using 2 chiplets, lower frequency, etc.; and do they take advantage of having more cores or of a larger amount of cache, since the 7900X has double the L3?):

https://www.techspot.com/review/2821-amd-ryzen-7800x3d-7900x3d-7950x3d/

Spider-Man seems to be one, not Cyberpunk; Hogwarts, Call of Duty

The main benefit from having >8 cores for gaming is if you do some form of multi-tasking while gaming. I play Warzone often, and both my i7 9700k (8 cores) and R9 5900x (12 cores) can run it fine playing solo. However, when I squad up and play quads while on a Skype video call, there's a significant performance loss on the 9700k; the 5900x doesn't care. This is one of the reasons I personally don't subscribe to "you don't need more than 8 cores for gaming". I do quite a lot with my PC and I personally will benefit from >8 cores while gaming even if the game, strictly speaking, may not.

This is obviously anecdotal. 7800x3d is far more powerful than a 9700k. I'm CPU limited in WZ on the 9700k even with nothing else going on. It also doesn't have SMT "hyperthreading" to help it out like the 7800x3d does

Wolverine2349

Weaksauce

- Joined

- May 30, 2016

- Messages

- 74

Because of the architecture changes on AMD's CPUs with more than 8 cores, and other differences, I am not sure benchmarks can tell us which games benefit from more than 8 cores, but we can find which ones do on AMD (maybe they take advantage of the 9th and 10th cores, but not enough to make up for the cost of using 2 chiplets, lower frequency, etc.; and do they take advantage of having more cores or of a larger amount of cache, since the 7900X has double the L3?):

https://www.techspot.com/review/2821-amd-ryzen-7800x3d-7900x3d-7950x3d/

Spider-Man seems to be one, not Cyberpunk; Hogwarts, Call of Duty

Does the extra cache on the 7900X vs. the 7700X really mean anything when the only reason the 7900X has more cache is that it has 2 CCDs instead of one, each with the same amount of cache? I mean, is there any advantage to more cache when it only comes from an extra CCD?

Tsumi

[H]F Junkie

- Joined

- Mar 18, 2010

- Messages

- 13,760

Unique case, but also says you don't need two vcache CCDs. One vcache CCD for gaming and one regular CCD to take care of background tasks is sufficient.

I'm also assuming you have hardware acceleration for Skype disabled as that will shift the load from GPU to CPU.

I will be upgrading from my 13900ks to the upcoming Ryzen 9000 series. Hopefully a 9950x3d...I already have a motherboard for it assembled with a case and a PSU. Will just transfer my RAM and GPU from my current system and I am off to the races.

The precise benefit of going AMD. Platform longevity.

Hardware acceleration is whatever the default setting is, which I’m almost certain is enabled. It still uses CPU cycles; not really a problem on modern CPUs, but on older 8-cores with no SMT that are already pegged, you notice it.

As far as CCDs, I don’t really want two CCDs at all. I’d like one 16 core ccd.

Here is a stupid question for everyone: Why can't AMD put the vcache on the other CCD as well?

The Infinity Fabric can't handle it. The transfer would be really huge if it needed to move both the thread AND its data from the 3D cache through the fabric.

Maybe next gen, if there are any CPUs at all like the current 79X0X3D (CCD + 3D cache CCD).
