erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 11,099

This is actually commendable and legit imo

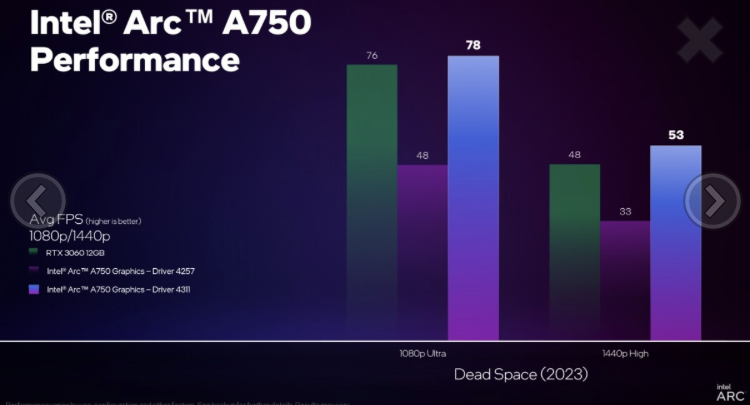

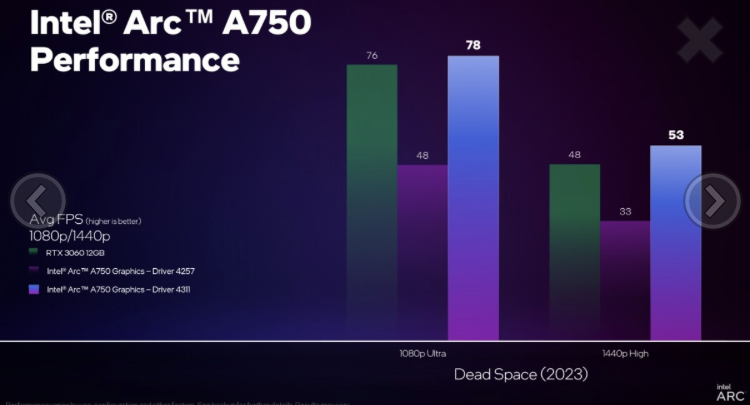

“According to Intel's own slides, the latest driver update brought decent performance improvements in several games, but more importantly, it is enough to give the Intel Arc A750 an edge, pushing it ahead of the RTX 3060 12 GB graphics card, at least in Dead Space Remake game. Although Intel has listed a bit of a higher price for the RTX 3060 12 GB, Arc A750 still offers higher performance per dollar, at least in some games. Of course, NVIDIA always has the RT and DLSS aces up its sleeve.

The Intel Arc A750 was pretty high on the performance per dollar in our review back in October last year, even when it was priced at $290, and with the recent driver updates, it is an even better choice.”

Source: https://www.techpowerup.com/307325/...h-rtx-3060-with-latest-driver-update#comments
