Hey people,

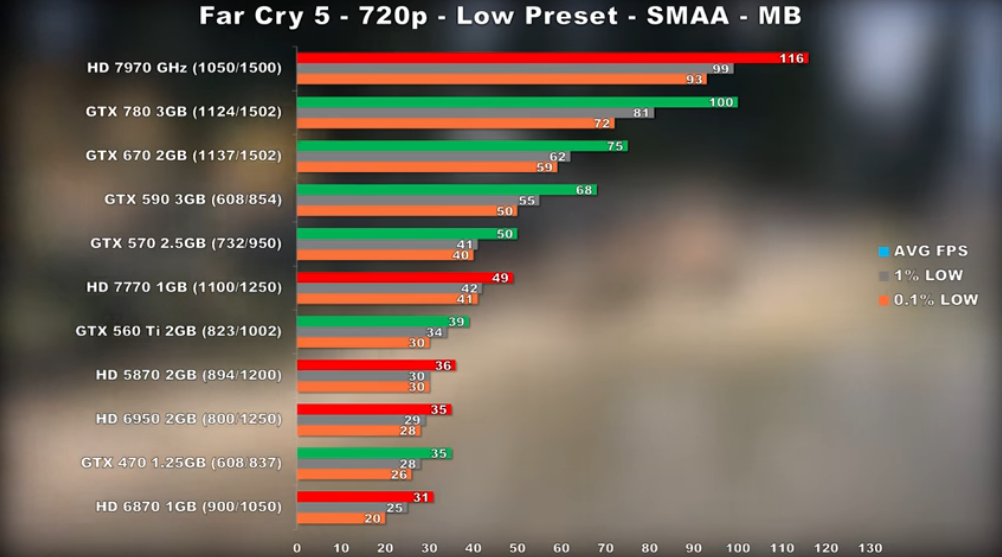

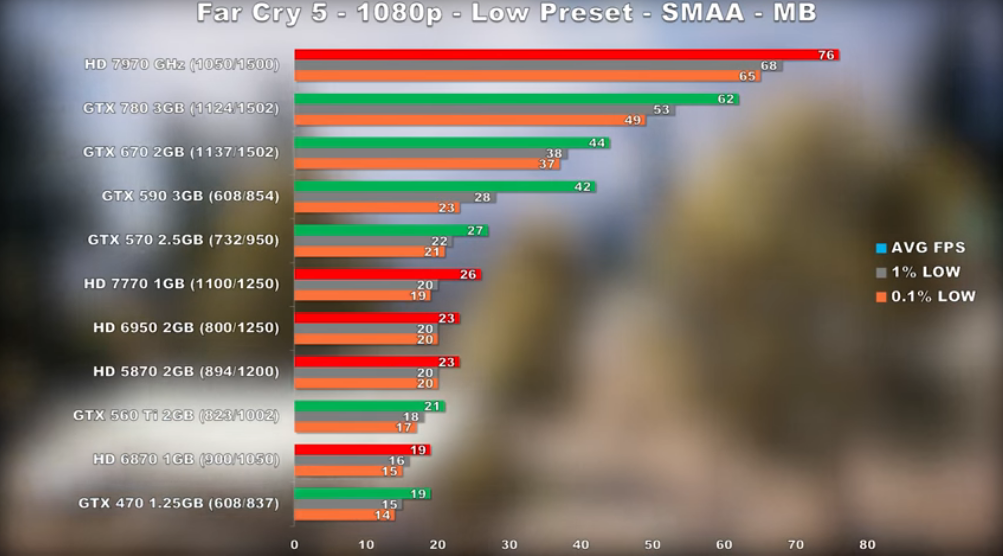

I did some Far Cry 5 benchmarking with some older DX11 cards and wanted to share my results. I tested at 1080p and 720p, using the Low, Normal, and High presets.

Here are the results for 1080p and 720p at the Low preset - the rest are in the video.

Test System:

5820K @ 4.0GHz

Asus X99-A

16GB DDR4 3000MHz

W10 Pro 64-bit

Latest NVIDIA + AMD Drivers

15.7.1 Drivers for the HD 5000 and 6000 cards.

Testing Methodology:

Captured 55 seconds of the built-in benchmark using Fraps. The results shown are single runs rather than averages over multiple runs, since only consistent runs were used.
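For anyone who wants to reproduce the numbers, here is a rough Python sketch of how an average FPS figure can be derived from a capture like this. It assumes you have a list of cumulative frame timestamps in milliseconds (the kind of data a frametime log gives you); the function name `average_fps` and the `window_s` parameter are made up for illustration, and this is not necessarily how the charts above were produced.

```python
def average_fps(timestamps_ms, window_s=55.0):
    """Average FPS over the first `window_s` seconds of a capture.

    `timestamps_ms` is assumed to be a list of cumulative frame times in
    milliseconds, in ascending order (hypothetical input format).
    """
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two frame timestamps")

    start = timestamps_ms[0]
    cutoff = start + window_s * 1000.0

    # Keep only the frames that fall inside the capture window.
    kept = [t for t in timestamps_ms if t <= cutoff]
    if len(kept) < 2:
        raise ValueError("not enough frames inside the window")

    elapsed_s = (kept[-1] - start) / 1000.0
    # N timestamps span N - 1 frame intervals.
    return (len(kept) - 1) / elapsed_s


# Example: a synthetic capture with one frame every 20 ms.
frames = [i * 20.0 for i in range(5001)]
print(round(average_fps(frames), 1))
```

Comparing this figure across a few runs is one simple way to check that the runs are consistent before picking one to report.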

Sorry if it's hard to read - I took a screen capture of the video.

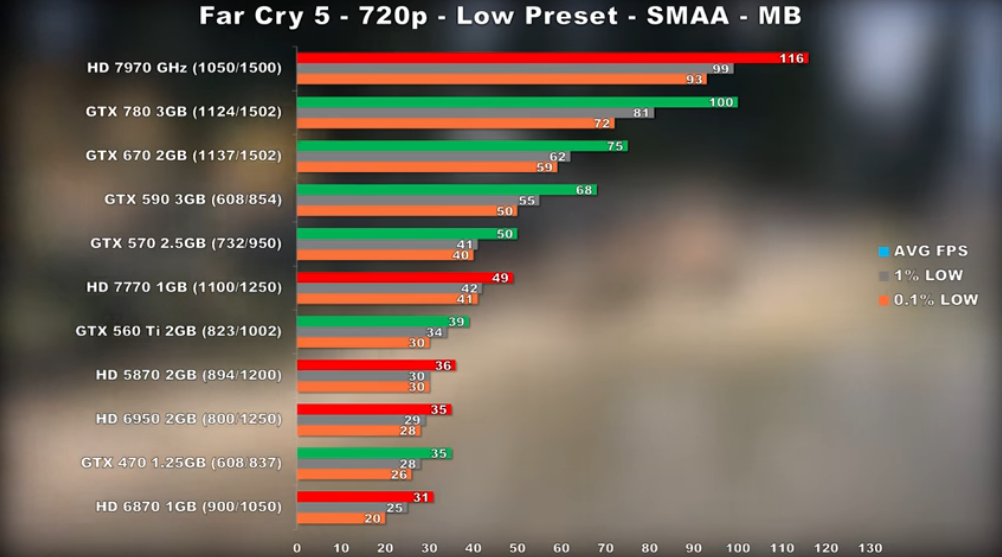

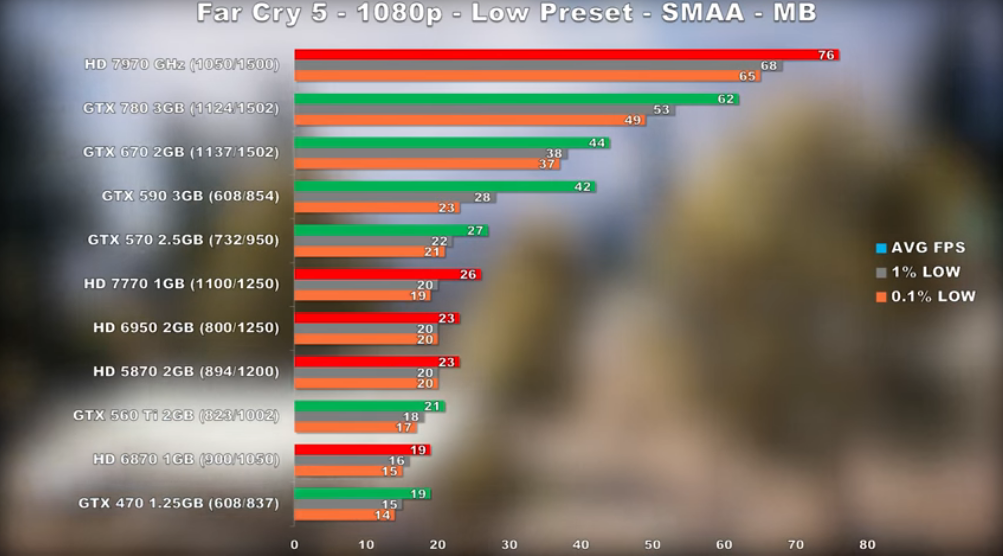

