Zarathustra[H]

Extremely [H]

- Joined: Oct 29, 2000
- Messages: 38,878

So,

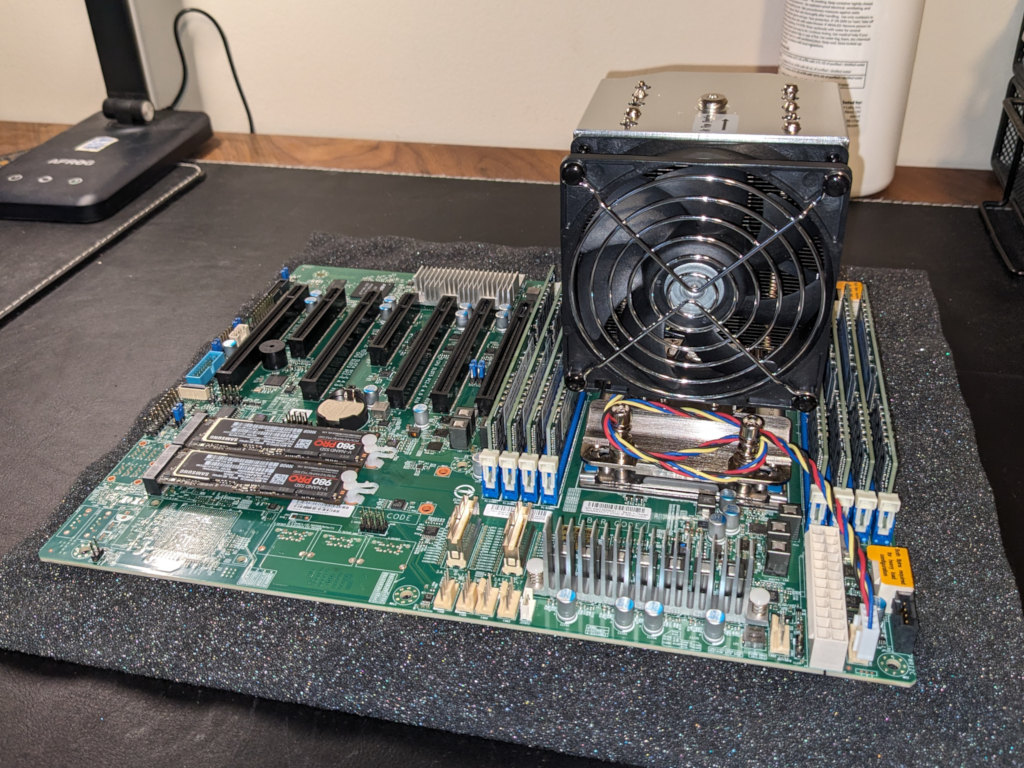

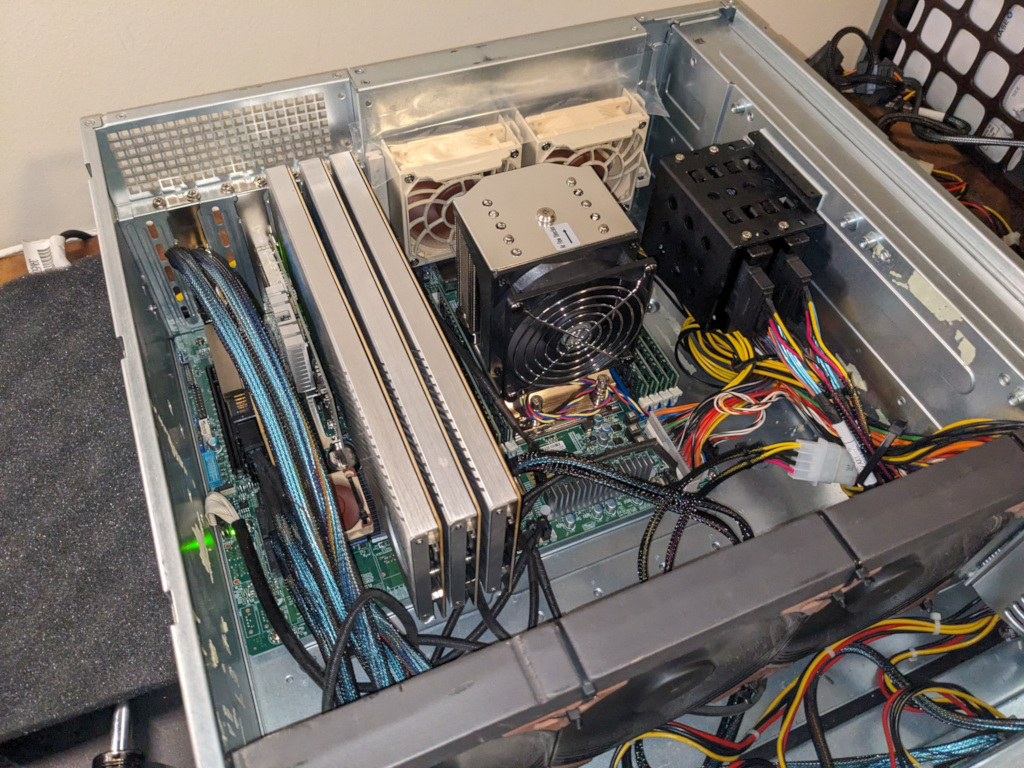

I am in the process of planning an upgrade to my old home VM server. I'm thinking of going EPYC this time around, with one of the many Supermicro H12SSL variants (I'm leaning towards the H12SSL-NT).

I decided to post this in this subforum since it's for a VM server, and I likely wouldn't get much traction in the consumer AMD Motherboard subforum.

I have a few questions about these boards, if anyone has experience with them:

1.) If I order one of these new (say, on Amazon), should I expect it to ship with a BIOS new enough to support Milan chips?

2.) If the firmware isn't new enough, what are my options? Can the board flash a BIOS update via the BMC without a CPU installed? Or should I buy the cheapest Naples chip I can find on eBay (one that isn't vendor-locked) just so I have something to flash the BIOS with?

Appreciate any wisdom you folks might be able to share!
