erek ([H]F Junkie) · Joined Dec 19, 2005 · Messages: 11,011

Might be able to play Crysis too

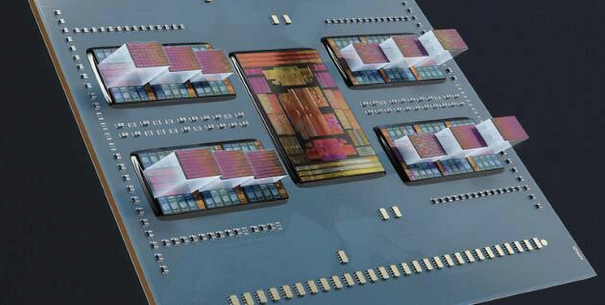

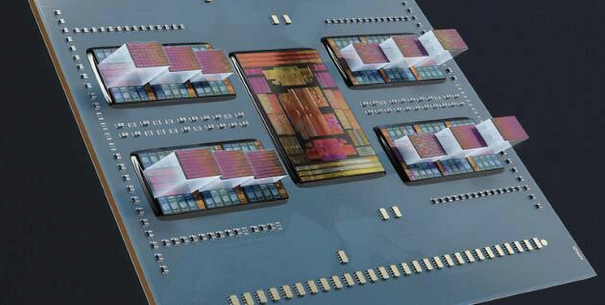

“In addition to doubling the gate density, AMD says the part also offers twice the bandwidth, which translates into a higher effective clock rate when emulating silicon. Meanwhile, the chip features a new chiplet architecture that places four FPGA tiles in quadrants, which Bauer says helps to reduce latency and congestion as data moves through the chips.

While all of this might sound impressive, anyone who has spent any time playing with emulation will know it tends to be highly inefficient, slow, and expensive compared to running on native hardware, and the situation is no different here.

Emulating modern SoCs with billions of transistors is a pretty resource-intensive process to begin with. Depending on the size and complexity of the chip, Bauer says dozens or even hundreds of FPGAs spanning multiple racks may be required, and even then clock speeds are severely limited compared to what you'd find in hard silicon.

According to AMD, while just 24 devices are required to emulate a billion logic gates, it can be scaled out to support up to 60 billion gates at clock speeds in excess of 50MHz.

Bauer notes that the effective clock rate does depend on the number of FPGAs involved. "For example, if you had a piece of IP that can live in a single VP1902, you're gonna see much higher performance," he said.

While AMD's latest FPGA is largely aimed at chipmakers, the company says the chips are also well suited to companies doing firmware development and testing, IP block and subsystem prototyping, peripheral validation, and other test use cases.

As for compatibility, we're told the new chip will take advantage of the same underlying Vivado ML software development suite as the company's previous FPGAs. AMD says it's also working in collaboration with leading EDA vendors, like Cadence, Siemens and Synopsys, to add support for the chip's more advanced features.

AMD's VP1902 is slated to start sampling to customers in Q3 with general availability beginning in early 2024. ®”

Source: https://www.theregister.com/2023/06/27/amd_versal_fpga_emulation/
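For a rough sense of scale, here is a back-of-the-envelope sketch using the article's "24 devices per billion gates" figure. It assumes capacity scales linearly, which real multi-FPGA partitioning won't do (interconnect, routing overhead and the clock-rate hit Bauer mentions are all ignored), so treat the 60-billion-gate result as a naive extrapolation, not an AMD number. The function name is just for illustration.

```python
# Figure quoted in the article: 24 VP1902 devices for ~1 billion logic gates.
DEVICES_PER_BILLION_GATES = 24
BILLION = 1_000_000_000


def devices_needed(gates: int) -> int:
    """Naive device count, ASSUMING capacity scales linearly with device count.

    Ignores partitioning overhead and inter-FPGA links, so real
    deployments would likely need more devices than this.
    """
    # Ceiling division in pure integer math (no float rounding):
    # a partially used device still counts as a whole device.
    return -(-gates * DEVICES_PER_BILLION_GATES // BILLION)


if __name__ == "__main__":
    for gates in (BILLION, 60 * BILLION):
        print(f"{gates:,} gates -> ~{devices_needed(gates)} devices")
```

Under that linear assumption the 60-billion-gate ceiling works out to roughly 1,440 chips, which lines up with Bauer's "multiple racks" comment.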
