In case you have not seen this...

The Radeon R9 Fury X is no less than 31-33% faster than the Nvidia GTX 980 Ti (at 4K).

AMD clobbers Nvidia in updated Ashes of the Singularity

Interesting. I'm curious to see if this advantage for AMD carries over to all DX 12 games. Only time will tell.

At this point I am just glad that AMD has gotten some good publicity. Competition soon would be nice.

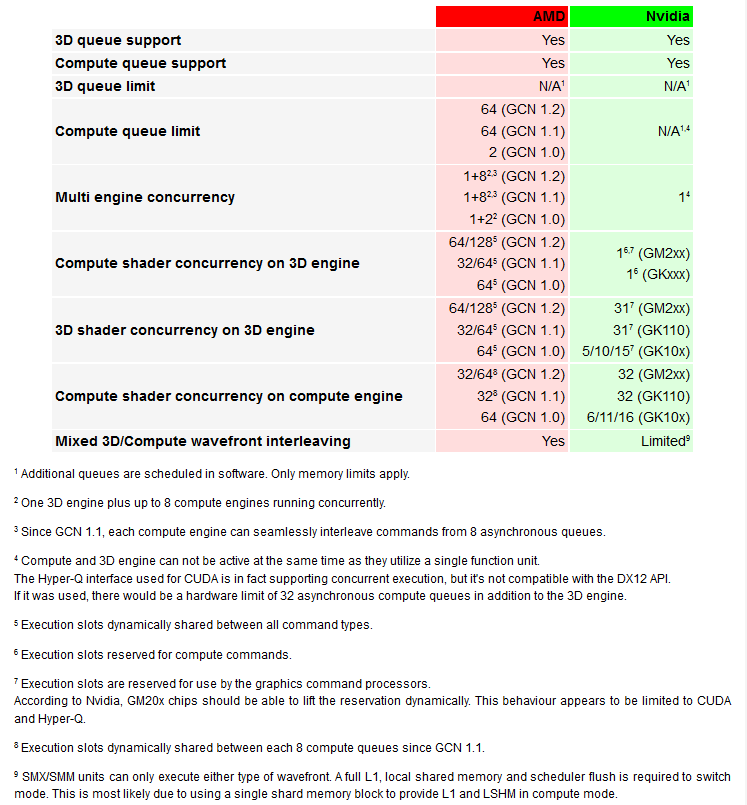

Update: (2/24/2016) Nvidia reached out to us this evening to confirm that while the GTX 9xx series does support asynchronous compute, it does not currently have the feature enabled in-driver. Given that Oxide has pledged to ship the game with defaults that maximize performance, Nvidia fans should treat the asynchronous compute-disabled benchmarks as representative at this time. We’ll revisit performance between Teams Red and Green if Nvidia releases new drivers that substantially change performance between now and launch day.

Ok, now that is out of the way. So... the question remains: can Nvidia's current cards run async in a POSITIVE performance way? Sure, they will likely enable a software solution, but that only turns the feature on; it does not guarantee positive results, and certainly not to the degree AMD has. Of course, that hinges on whether there is actually a hardware solution on current Nvidia cards (most believe not).

First, some basics. FCAT is a system NVIDIA pioneered that can be used to record, playback, and analyze the output that a game sends to the display. This captures a game at a different point than FRAPS does, and it offers fine-grained analysis of the entire captured session. Guru3D argues that FCAT’s results are intrinsically correct because “Where we measure with FCAT is definitive though, it’s what your eyes will see and observe.” Guru3D is wrong. FCAT records output data, but its analysis of that data is based on assumptions it makes about the output — assumptions that don’t reflect what users experience in this case.

AMD’s driver follows Microsoft’s recommendations for DX12 and composites using the Desktop Windows Manager to increase smoothness and reduce tearing. FCAT, in contrast, assumes that the GPU is using DirectFlip. According to Oxide, the problem is that FCAT assumes so-called intermediate frames make it into the data stream and depends on these frames for its data analysis. If V-Sync is implemented differently than FCAT expects, the FCAT tools cannot properly analyze the final output. The application’s accuracy is only as reliable as its assumptions, after all.

Well hang on, Guru3D updated their review with the following:

Update: hours before the release of this article we got word back from AMD. They have confirmed our findings. Radeon Software 16.1 / 16.2 does not support a DX12 feature called DirectFlip, which is mandatory and the solve to this specific situation. AMD intends to resolve this issue in a future driver update.

That puts an entirely different narrative than the one that ExtremeTech is insinuating here.

Actually both seem to be playing with words. DirectFlip is part of DWM, but it is the compositing part that is interesting or misleading. Before DWM there was DCE (desktop compositing engine), so that seems misleading. But there are also the requirements of DX12/WDDM 2.0:

Direct3D 12 API, announced at Build 2014, requires WDDM 2.0. The new API will do away with automatic resource-management and pipeline-management tasks and allow developers to take full low-level control of adapter memory and rendering states.

Nvidia performed wonderfully. Very few people buy cards based on future titles, especially for the 2 or so years Maxwell was/is king. This allowed Nvidia to focus on hardware features that mattered in the current ecosystem.

AMD misperformed. It focused on features that had no bearing in the current marketplace, and therefore lost significant market share. By the time these titles launch, we may very well see Pascal, or at worst, the wait for Pascal will be relatively short. Pascal will likely have an updated implementation. If true, this was the smartest bet by Nvidia.

The /r/AMD sub is having a field day with this story.

Oh, and as expected, there's yet another hate thread on Kyle in that subreddit. The angry 13-year-old behavior of /r/AMD never ceases to amaze me haha.

And in true testament to that subreddit's real focus: the anti-HardOCP thread is at #2 while the actual benchmark threads are at #10 and #11.

Really, how are your antics better?

Really tired of the childish snideness of people who get joy at calling out other people.

And between the 2 articles, the Extremetech one is at least legible in English. From a journalism standpoint, yeah, that matters in terms of credibility.

Each to their own. AMD is poised for the future, provided they can convince people to take advantage of tech already baked into their cards. They already have a path back in with DX12 adopting Vulkan into itself. But we really won't know until more games come out. Which cooler, smarter heads have already said.

As far as Kyle, or any site for that matter, it's my own personal belief that you don't know who really is on the "take" anymore, and even the way the gaming "journalism" industry operates makes me incredibly skeptical of any tertiary sites that could still tie in to that industry. Then again, it's not like Kyle could come here and say "I'm clean!" and have a skeptic like me believe him, so I at least appreciate the no-win situation an honest tech journalist can often find themselves in.

Given this and the fact that multi-core CPUs benefit from DX12, my FX-8350 machine may keep up with my i7-4790K machine in some games in the future.

Isn't this the same game where an Intel i3 chip outperforms an AMD FX-8350 so badly?

Testing DirectX 11 vs. DirectX 12 performance with Stardock's Ashes of the Singularity

It's funny, because reddit is having a field day but [H] gave the Nano a gold award.

Conclusion

Most people were pissed because what AMD tried to do was straight up corrupt the entire review industry. Give us good reviews or we'll cut you off.

There's always some kind of bias but [H] seems to have always been as in the middle as possible and to me gives praise where it's deserved.

I upgraded from an i3 to an FX8320E recently, and gaming performance went from barely able to run a game at 1080p on minimum settings to being able to max games out across three screens while running multiple game servers at the same time. All parts other than mobo and processor were held constant.

yeah, no.

Yeah, yes. I am on a 6700K at home now, coming from an FX 8350, while doing 4K, and guess what? There is little to no difference in gaming. Mind you, other things feel a little quicker, but that could be because of the newer chipset, DDR4 and improved IMC more than anything else. I like the upgrade, but I have been regretting it here and there because of the $800 I spent but did not need to spend yet.

little to no difference between CPUs in a GPU constrained workload. wow, what an incredible observation you've made.
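For anyone following along, the GPU-bound point in this exchange can be sketched with a toy model. This is only an illustration: the function name `frames_per_second` and every millisecond figure below are made-up assumptions, not measurements from either poster's machine, and real frame pacing is messier than a single `max()`.

```python
def frames_per_second(cpu_ms: float, gpu_ms: float) -> float:
    """Toy model: a frame takes as long as the slower of the two stages."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs in milliseconds (assumptions, not benchmarks).
slow_cpu_ms, fast_cpu_ms = 12.0, 8.0
gpu_4k_ms, gpu_1080p_ms = 25.0, 6.0

# At 4K the GPU stage dominates, so the CPU swap changes nothing in this model.
fps_4k_slow = frames_per_second(slow_cpu_ms, gpu_4k_ms)
fps_4k_fast = frames_per_second(fast_cpu_ms, gpu_4k_ms)

# At 1080p the CPU stage dominates, so the faster CPU shows up in the result.
fps_1080p_slow = frames_per_second(slow_cpu_ms, gpu_1080p_ms)
fps_1080p_fast = frames_per_second(fast_cpu_ms, gpu_1080p_ms)

print(fps_4k_slow, fps_4k_fast)
print(fps_1080p_slow, fps_1080p_fast)
```

Under these assumed numbers, both CPUs land on the same 4K frame rate, while the gap only opens up once the GPU stops being the slower stage.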

Believe it or not, but it's true. Better yet, GPU-only tests ran exactly the same, only in games where the CPU was needed (aka all of them) did performance drop.

what i3?

Interesting, and I would love to see more development on this. I was always an Nvidia guy, but this can only be a good thing: back to competition, cheaper prices, more power, more choices, victory for consumers!

Out of curiosity. Would a company jump to hardware that has lower market saturation in a market that's already beginning to stagnate?

This instance isn't typical, for sure, in that the developer is interested in making a cutting-edge game using new techniques. I don't think they are interested in the slightest in who sold what or what share of the market has what hardware. Sure, it must be considered, but it seems to me, given their forthright nature thus far and the fact they have been the only ones to tout the new features and abilities now accessible with DX12, that market share has little to do with it.

Haswell. i3-4130

I don't think nVidia gives a damn right now about spending resources to shove out a pissing contest driver for an in-development game in order to showcase a beta level test to the public on current hardware that will be "old news" when said game actually gets released because the next gen GPUs will be on the shelves...and I can't say that I blame them one bit. I'll wait for multiple DX12 titles to get tested on Arctic Islands and Pascal before I get all giddy over it.

there are so many things wrong with what you've said i really don't even want to bother. in games where the 8320 can be used to its potential, which is a very limited set, yes it would be quite a bit faster. in the vast majority of games, which use 4 or fewer threads, i don't in any way see how there could be a substantial difference between the two, as the 4130 is 46% faster than the 8320 per thread.

i think these convenient videos illustrate my point pretty well: 4130 and 8320 with a 280X, 4130 and 8320 with a GTX 960. of course the 8320's performance will increase in the future but you're talking about right now as well as in a much more GPU limited scenario; what you're saying is an absurd exaggeration.
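The per-thread argument above can be put into a quick back-of-the-envelope sketch. Big caveats: the 46% figure is the claim from the post, not something measured here; the names `Cpu`, `relative_throughput` and `faster_cpu` are made up for illustration; and the model assumes perfectly linear scaling, counts the i3-4130's four hardware threads (two cores with Hyper-Threading) as full threads, and ignores the FX-8320's shared-module design. Treat it as a rough picture of the reasoning, not a benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    hw_threads: int          # hardware threads the chip exposes
    per_thread_speed: float  # relative speed of one thread (FX-8320 = 1.0)


def relative_throughput(cpu: Cpu, game_threads: int) -> float:
    """Naive model: throughput grows linearly with the threads a game can fill."""
    usable = min(game_threads, cpu.hw_threads)
    return cpu.per_thread_speed * usable


# 1.46 is the per-thread advantage claimed in the post, not a measurement.
I3_4130 = Cpu("i3-4130", hw_threads=4, per_thread_speed=1.46)
FX_8320 = Cpu("FX-8320", hw_threads=8, per_thread_speed=1.00)


def faster_cpu(game_threads: int) -> str:
    """Name of the chip that comes out ahead for a game using this many threads."""
    i3 = relative_throughput(I3_4130, game_threads)
    fx = relative_throughput(FX_8320, game_threads)
    return I3_4130.name if i3 > fx else FX_8320.name


for n in (2, 4, 6, 8):
    print(n, faster_cpu(n))
```

Under these assumptions the i3 stays ahead for games that fill up to five threads, and the 8320 only pulls in front from six threads upward, which is the shape of the argument being made in the post.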

But you can OC the 8320 a great deal, getting to 4.4 GHz (seeing 4.6 GHz as avg but not feeling that), which in most cases would overcome any real shortcomings. Not least, the i3 would likely be paired with a less-than-stellar mobo based on cost and the fact it is an i3, whereas the 8320 would require better than a bargain-basement mobo to operate with any amount of decency. Outright denying a claim based on the processors alone is shortsighted, just as much as stating there is a huge difference when little is known of the whole platform. And sorry to say, but anyone recommending an i3 for gaming over even an 8320 is just.

But the game releases next month. And I seriously doubt it is because they don't care. It is far more likely that they really can't do async and DX12 will put AMD in a far better performance position. Hence why they are saying nothing, and likely why they have the function turned off at the driver level.

Look at performance in recent titles. You'll see that the trend has already happened. No waiting for future games. And not everyone upgrades every generation. People who bought Kepler got a short-lived card, and it's looking like Maxwell is the same. I wouldn't be happy with that if I'd bought a 780, then upgraded to a 980 and realized I could have bought a 290X and been in the same (or now better) performance category all along without replacing it.

wut