CookieFactory

Limp Gawd

- Joined: May 17, 2006
- Messages: 359

If the 5090 is $2500 but can still fit in my Fractal Terra then sign me up.


It was discounted by $1000 like 6 months after release. If I recall correctly, it was discounted to like $1500 or $1600 a month or two after release.

I certainly do not recall it being discounted that hugely a month or two after release. Perhaps others can chime in about it. Nearly 6 months after release the 4090 came out, which is one reason it was not a very good buy at the time.

I don't think the 3090 Ti was officially discounted a month or two after its release. It was more like a month or two before the 4090 release, a typical move when you're trying to clear inventory to make room for incoming product.

I found this, which was 2.5 months after release.

The 5090 will not be above $2000, likely $1500-$1800. The 5080 will be $1000. Nvidia knows how much enthusiasts and high-end buyers are willing to pay; the 4080 Super made it obvious. The original 4080 did not meet sales expectations; they were testing the waters. I wouldn't expect a 5080 to be even close to a 5090 in performance, though. If anything, the gap will be larger than the one between the 4080 and 4090.

That's how I'm hoping this will play out. It makes the most sense based on Nvidia's experience with the previous gen...

What year is the 5090 going to hit $500?

That seems just impossible to tell: what money will do, what the next two generations of Nvidia cards will look like, what 24GB and that kind of AI performance will be worth, and so on. But if it can be used as a projection, used 3090s, which will soon be 4 years old, are still quite far from getting that low, despite how much better the 4090 was.

As well as DLSS upscaling quality, frame gen in many new games, and overall (perceived or not) driver quality. It would take a couple of gens for me to trust things have changed with AMD.

Thus far they have not, so I'll be in for a 5xxx series Nvidia card. The only real question is whether the price makes it an 80 or a 90. If the 90 is in line with the last two generations on price ($1500ish), I'll almost definitely grab one. Of course, as you noted, if the 80 is performant enough and the pricing attractive enough, I'm not going to buy more than I need.

You hit the nail on the head with “a couple of gens.” That’s AMD’s problem in a nutshell. They’ve never knocked it out of the park twice in a row, so they’ve never stolen durable mindshare from NVIDIA. Even when they’re on a roll, there’s always some bonehead move like the $900 7900 XT vs the $1,000 XTX, RDNA 3’s early driver problems, and the way they left so much clockspeed headroom on the table. These things get mostly cleaned up before the generation is over, but the cards don’t get re-reviewed, so the early problems determine their fate long after the problems are fixed.

NVIDIA also knows how to inject meaningless ideas into people’s heads to make them think they can’t go with the competition. Honestly, how many people actually turn on RT and leave it on even with RTX 4x00 cards, much less in 2019 when people thought they had to buy a 2080 Ti so they could trace those sweet rays? This coming year it will be AI extensions that everyone talks about and almost no one actually benefits from.

Hm? RT is quite noticeable in some titles, especially Hogwarts Legacy. It can look quite nice. And as an antialiasing technology, DLAA runs absolute circles around any other AA I've tried in almost any title (both performance-wise and looks-wise). DLAA makes older games like No Man's Sky basically look like they got a generational upgrade. If you own No Man's Sky, try it yourself: turn on DLAA (or even DLSS) and then come back to me. DLSS also keeps cards relevant far longer than they have any business being, thanks to the huge FPS uplifts (granted, with some tradeoffs).

A 5080 launching first sounds incredibly dumb to me, but we'll see; it isn't like Nvidia has never made dumb moves before.

If, like the 3080/3090, they are on the same chip and they want all the best dies to go to the pro-line product, I am not sure it would be dumb.

But that would seem completely at odds with all the rumors about the gap between the 5080 and 5090... Or maybe the 5090 will be two 5080s glued together, which would make releasing the 5080 first a non-issue and would be in line with the rumours of a big relative gap.

They are not. 5090 is GB202 while 5080 is GB203.

Does anyone know anything certain about any of this? Or that the code name for two glued GB203s would not be GB202? Do we have a source confirming definitively that there is no MCM in play, like they did with the B200 line?

What? GB202 is now rumored to be an MCM of GB203? Lol, these rumors are something else.

Given the rumours of GB202 being double GB203 (bandwidth, core count, etc.) and Nvidia's announcement of an MCM Blackwell part, it is an obvious rumour to spread.

I have never seen a statement that it would not be the case.

I truly cannot wait for the $10k 8090 cards to show up! What a time to be alive.

I start selling my ass on the side of the road 2 months before every new GPU release... it's become a family tradition!

longitude/latitude?

The only thing for certain is that the scalpers will be there right at the first release, whatever card it happens to be. And some morons will go on eBay and pay them.

Lots of 3080 owners like myself skipped the 4xxx series waiting for a flagship 5xxx. It's going to be nasty on release trying to get these.

Dumb rumor. Full AD102 is almost double the size of AD103 in terms of CUDA core count. Not quite, but pretty close. But it likewise has a 384-bit bus vs AD103's 256-bit.
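Whether AD102 is "almost double" AD103 can be checked with quick arithmetic. The sketch below is a minimal illustration, assuming the fully enabled die figures NVIDIA published for Ada (AD102: 144 SMs on a 384-bit bus; AD103: 80 SMs on a 256-bit bus) and 128 FP32 CUDA cores per Ada SM. The function names are hypothetical helpers, not part of any real tool.

```python
# Fully enabled Ada Lovelace die configurations (assumed from NVIDIA's published specs).
FULL_DIES = {
    "AD102": {"sms": 144, "bus_bits": 384},
    "AD103": {"sms": 80, "bus_bits": 256},
}
CUDA_CORES_PER_SM = 128  # each Ada SM contains 128 FP32 CUDA cores


def cuda_cores(die: str) -> int:
    """Total FP32 CUDA cores on a fully enabled die."""
    return FULL_DIES[die]["sms"] * CUDA_CORES_PER_SM


def ratio(big: str, small: str) -> tuple[float, float]:
    """Return (core-count ratio, memory-bus-width ratio) of the big die over the small one."""
    core_ratio = cuda_cores(big) / cuda_cores(small)
    bus_ratio = FULL_DIES[big]["bus_bits"] / FULL_DIES[small]["bus_bits"]
    return core_ratio, bus_ratio


if __name__ == "__main__":
    cores, bus = ratio("AD102", "AD103")
    print(f"AD102/AD103 cores: {cores:.2f}x, bus: {bus:.2f}x")
```

Run as a script, it reports 1.80x on cores but only 1.50x on bus width, which is the poster's point: the big die is close to, but not, a clean doubling of the smaller one.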



I don't see anything here to suggest that 2x GB203 somehow is now a GB202.

Lots of 512-bit (with twice the VRAM) rumors have floated around:

https://www.notebookcheck.net/Nvidia-GeForce-RTX-5090-Flagship-GB202-GPU-to-feature-512-bit-wide-bus.811948.0.html

Same for core count (and MCM talk because of it):

https://twitter.com/kopite7kimi/status/1767083262512615456

See the tweet; kopite7 is a popular leaker, I think. And obviously, the already-announced datacenter lineup doing it, and Apple having done it for a long time, are a vague suggestion that this direction is possible (or at least make it very easy to start a rumour).

Pfft. The last 512-bit consumer GeForce product was in the 200-series in 2008. Sure, there was a Titan with HBM, but I'm not counting that. I just don't buy it.


We have had 512-bit memory GPUs in the past, like the R9 290X.

For one, wouldn't you then be able to use a smaller die with multiple chiplets? None of the rumored dies seems out of place with how the previous 40-series went, which screams monolithic to me.

My money is on it going to be another 384-bit 24GB 5090, 256-bit 16GB 5080, 192-bit 12GB 5070, etc.

I think I am missing the point here? I do not think the leaker is implying that a monolithic die cannot have a 512-bit bus; the interesting point was it being exactly double.

Not really chiplets, more like Apple's M-series Ultra or Nvidia's GB200, and it could just be for the 5090, with all the rest being monolithic (if the talk of future TSMC nodes limiting maximum die size is true, they could be forced to do it for the top of the line soon). And what is counted as a core is not always clear-cut; it can be almost subjective.

That would be the smart money: letting the GDDR6X -> GDDR7 transition provide all the bandwidth increase, with better cache tech continuing to make bandwidth matter less... And by the time the Super cards launch, maybe the different-sized GDDR7 modules will give flexibility on capacity.
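The 384-bit/24GB, 256-bit/16GB and 192-bit/12GB pairings in this exchange fall out of simple arithmetic: each GDDR6X or GDDR7 package has a 32-bit interface, so the bus width sets the package count, and the package density sets the capacity. A rough sketch, assuming one package per 32-bit channel (no clamshell boards) and 2 GB packages by default; the 3 GB density is included only to illustrate the "different sized GDDR7 module" idea, and the helper names are hypothetical.

```python
# Each GDDR6X/GDDR7 package exposes a 32-bit interface, so the bus width fixes
# how many packages a card carries (assumption: no clamshell, one package per channel).
BITS_PER_PACKAGE = 32


def vram_gb(bus_bits: int, gb_per_package: int = 2) -> int:
    """VRAM capacity in GB for a given bus width and per-package density."""
    if bus_bits % BITS_PER_PACKAGE:
        raise ValueError("bus width must be a multiple of 32 bits")
    return (bus_bits // BITS_PER_PACKAGE) * gb_per_package


def bandwidth_gbs(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte."""
    return bus_bits * gbps_per_pin / 8


if __name__ == "__main__":
    for bus in (512, 384, 256, 192):
        # Compare today's 2 GB packages with hypothetical 3 GB packages.
        print(f"{bus}-bit: {vram_gb(bus)} GB with 2 GB packages, "
              f"{vram_gb(bus, 3)} GB with 3 GB packages")
    print(f"384-bit at 21 Gbps: {bandwidth_gbs(384, 21):.0f} GB/s")
```

For example, `vram_gb(512)` gives the 32GB implied by the 512-bit rumor, `vram_gb(384, 3)` gives 36GB, and `bandwidth_gbs(384, 21)` reproduces the RTX 4090's 1008 GB/s with 21 Gbps GDDR6X. A wider bus and a faster memory standard are two independent levers on bandwidth, which is why a jump to 512 bits in the same generation as GDDR7 raised eyebrows.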

That is exactly the point... just because it's 512-bit, which is exactly double 256, why would he even bring up the possibility of MCM if 512-bit is already possible on a monolithic die?

Because that big a jump, in the generation that should already increase bandwidth via GDDR7 instead of staying at 384 bits, is a bit strange if that is not the reason. And why not? Nvidia just launched an MCM product in the same generation.

Apple will have, what, 2-3 generations of MCM GPUs in the field by then...

ok then. I'll check back in a few months when the 5090 ends up NOT being MCM.

I would put it at around a 95-97% chance that you will be right, but I am not sure where the massive performance gain will come from without much of a node change...

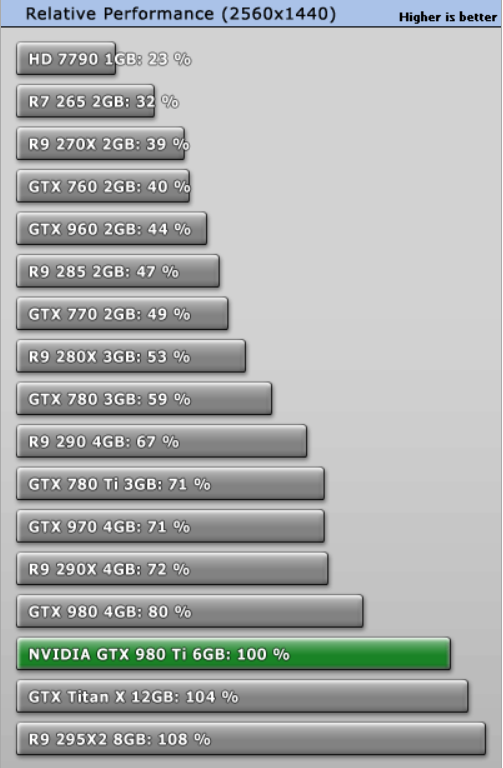

This has been discussed on this thread already. A node shrink is nice, but it is not a mandatory requirement for a major performance uplift. 780 Ti -> 980 Ti proves this. Same 22nm node but 50% performance increase.

Maxwell was awesome.


So if you're talking about features like DLSS and DLAA with the statement "AI extensions that everyone talks about and almost no one actually benefits from", I can't really do anything but disagree. This AI stuff is really powerful and really nice for card (and game) longevity. AMD does need to catch up; that's all there is to it. Almost every subjective comparison notes that AMD's equivalent to Nvidia's features in that space is just flat-out worse. Certainly not unusable, but worse.

I do get where you’re coming from, and I’m sure there are plenty of people who use and appreciate RT in 2024. In my post I was talking mainly about RT in 2019, when the 2x00 series was on the market. By “AI extensions” I meant something similar to CUDA, where NVIDIA cards accelerate local AI with some kind of slick application that AMD cards can’t use.

28nm. 22nm was an Intel process.


There's a lot of doom and gloom around GPU pricing, and not without reason, but at the end of the day NVIDIA's objective is to turn a profit, and the $1,200 RTX 4080 didn't do that because few people bought one. They had to reintroduce the same card under a new name so they could cut the price to $999 without losing face. They're not going to jack the x80 price again, because the market just taught them a very expensive lesson. The 4090, OTOH, was actually kind of underpriced at $1,600. They could have charged $2k and still sold out, given the huge performance uplift, excellent efficiency vs last gen, and the AI boom with people seeking cheaper alternatives to pro-level cards. This is why rumors show such a huge difference in CUs between the 5080 and 5090, IMO. One is destined for a $999 price level, and the other is a Titan-level beast costing at least $2k.

Personally, I sat out this whole generation because I just couldn't justify the insane prices for any card that would have been a noticeable upgrade from my 6800 XT, meaning both companies earned zero profit from me, even though I was ready and excited to pay $800 until I saw how little they offered in return. I might pick up a 7900 XTX this year if I can snag a used one for $750 or less, or maybe a 7900 XT or GRE for $600 and $500 respectively. Otherwise, I'll just keep grumbling along, running my racing sims and UEVR games in Virtual Desktop at a locked 36 or 45 fps on medium settings and 5k resolution. Even a 4090 can't run them maxed at native 6k 120 fps (and I doubt a 5090 will be able to either), so I'm not willing to overspend if I still have to make compromises.

This is my exact same sentiment and situation. I was ready to move to the 4000 series on launch day (4080 or 4090, I was undecided), but after seeing those prices and the performance per dollar, no thanks; I'll keep my money and sit this gen out.

And AI reconstruction and frame gen make perfect sense when you think about it for a minute: there's a horrifying amount of GPU/CPU cycles wasted on things humans cannot see or perceive whatsoever (it's why those techs work so well, even if they're not perfect yet), so it's essentially just more software optimization, and it makes the dream of 1000fps/1000Hz + ultra high resolution actually imaginable in our lifetime.

Eye tracking would be another one of those technologies (especially if the trend of gamers' monitors getting bigger continues, and it is obviously already relevant for VR): only 1-2 degrees of vision need to be high resolution (plus some margin of error around it). Especially if the system gets to 1000 fps, fast enough to react to a quick eye movement, we could have 300 dpi on a very small portion of the screen and everything else at some old-school 720p density and quality, I would imagine, where our eyes only see movement and bright changes of light.
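The eye-tracking idea is essentially foveated rendering, and the pixel savings are easy to estimate. A back-of-the-envelope sketch with purely hypothetical settings: a 3840x2160 panel, a full-resolution inset covering 10% of each screen dimension, and the periphery rendered at 1/3 linear resolution (exactly 1280x720 density on that panel). Real eye-tracked renderers are more involved; this only counts shaded pixels, and `shaded_pixels` is a hypothetical helper.

```python
def shaded_pixels(width: int, height: int, fovea_frac: float, periphery_scale: float) -> float:
    """Pixels shaded per frame with a full-resolution foveal inset.

    fovea_frac: fraction of the screen's width and height covered by the
        full-resolution inset, so its area fraction is fovea_frac ** 2.
    periphery_scale: linear resolution scale for everything outside the inset
        (1/3 on a 3840x2160 panel corresponds to 1280x720 density).
    """
    if not (0.0 <= fovea_frac <= 1.0 and 0.0 < periphery_scale <= 1.0):
        raise ValueError("fractions must be within (0, 1]")
    total = width * height
    fovea_area = total * fovea_frac ** 2
    # Area scales with the square of the linear resolution scale.
    periphery_area = (total - fovea_area) * periphery_scale ** 2
    return fovea_area + periphery_area


if __name__ == "__main__":
    full = 3840 * 2160
    # Hypothetical settings, not measurements.
    foveated = shaded_pixels(3840, 2160, fovea_frac=0.1, periphery_scale=1 / 3)
    print(f"full: {full:,} px, foveated: {foveated:,.0f} px, saving: {full / foveated:.1f}x")
```

With those assumptions the foveated frame shades roughly 1.0 million pixels instead of about 8.3 million, around 8x fewer, which is the kind of headroom the post is pointing at.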