ChrisUlrich

Weaksauce

- Joined

- Aug 4, 2015

- Messages

- 117

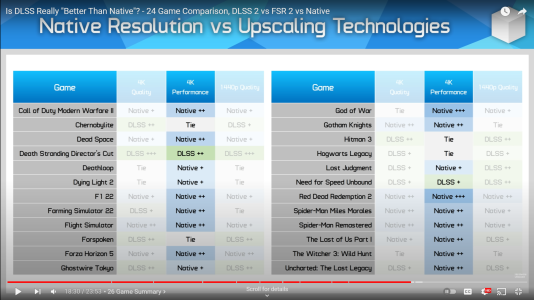

I have heard a lot of conflicting information regarding the use of DLSS.

Some people say that using DLSS 2.0 or 3.0 (3.0 for me soon, since I just got a 4090) barely hurts visuals and isn't noticeable at all.

Others say that you can't experience "true 4K" with DLSS. It just hurts the quality too much.

Just want to talk about it with some people. I always use DLSS since I can't run anything at 4K with my 3080 unless I have it enabled. But it seems that I won't be able to run games on Ultra at 4K even with the 4090, so DLSS is a must if I want to achieve 4K @ 120FPS.

Are you better off living at 60fps without DLSS or 120fps with DLSS?

