German Muscle


Build Name: Undecided yet; open to suggestions.

Purpose: This machine is intended to be a server for VMs that need really fast CPUs and storage. I want to test VMs on a regular server versus on a very high-frequency CPU with fast storage, to see whether and how their behavior changes. I selected hardware that is versatile for this use: if I take it down, I can repurpose it as another system or have some overclocking fun with it. I won't ever get rid of it; if I stop using it, I will just display the board on a shelf. This is also my first foray into watercooling in an enterprise/homelab setting, so part of this project is wrapping my mind around that and planning for scenarios where something goes sideways.

Status: Some parts on hand, acquiring the rest, planning and measuring.

PC Parts List

Case: Alphacool ES 4U Rackmount Case

CPU: Intel Xeon W-3175X 28C/56T Processor

MB: EVGA SR-3 Dark Motherboard

RAM: 6x 16GB G.Skill Trident Z DDR4-3600

GPU: ASRock Arc A380

Boot SSD: Micron 256GB M.2

VM Storage: ASUS Hyper M.2 card with 4x WD Black SN850X 1TB in RAID 10

PSU: Corsair HX1000i

NIC: Intel XL710-QDA2 Network Card
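For a rough sense of what the RAID 10 array on the Hyper M.2 card yields, here is a minimal Python sketch of the capacity and fault-tolerance arithmetic. It assumes a plain RAID 10 (drives grouped into mirror pairs, pairs striped together), decimal-TB drive ratings, and, for the survival figure, that failures hit drives uniformly at random. The function names are hypothetical, and which RAID implementation will actually manage the array is not specified here.

```python
from math import comb


def raid10_summary(drives: int, drive_tb: float) -> dict:
    """Capacity and fault-tolerance figures for a plain RAID 10 (striped mirror pairs)."""
    if drives < 4 or drives % 2 != 0:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    pairs = drives // 2
    usable_tb = pairs * drive_tb              # each mirror pair holds one copy of its data
    usable_tib = usable_tb * 1e12 / 2**40     # drive makers use decimal TB; many OSes report TiB
    return {
        "raw_tb": drives * drive_tb,
        "usable_tb": usable_tb,
        "usable_tib": round(usable_tib, 2),
        "guaranteed_failures_tolerated": 1,   # losing both drives of one pair loses the array
        "best_case_failures_tolerated": pairs,  # at most one drive lost from every pair
    }


def survival_probability(drives: int, failures: int) -> float:
    """Chance the array survives `failures` simultaneous drive losses,
    assuming (hypothetically) every drive is equally likely to fail."""
    pairs = drives // 2
    if failures > pairs:
        return 0.0
    # The array survives only if no two failed drives share a pair:
    # choose `failures` distinct pairs, then one of the 2 drives in each.
    safe = comb(pairs, failures) * 2**failures
    return safe / comb(drives, failures)


info = raid10_summary(drives=4, drive_tb=1.0)
print(info["usable_tb"], info["usable_tib"])   # 2.0 1.82
print(round(survival_probability(4, 2), 3))    # 0.667
```

With 4x 1TB drives this works out to 2TB usable (about 1.82TiB as most operating systems report it). The array always survives one drive failure, and survives a second only if it lands in the other mirror pair (4 of the 6 possible two-drive combinations).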

WC Parts List

CPU Block: EKWB Annihilator Pro

Radiator: Alphacool XT45 360

Reservoir: Alphacool ES 4U front reservoir with D5 top

Pump: Either an XSPC D5 or an Optimus/Xylem D5, both with SATA power

Fittings: Bitspower, black. Still working out the details, since I'm unsure whether I'm going to run the CPU block and motherboard block in series or in parallel.

Tubing: EPDM, 1/2" ID x 3/4" OD

Fans: 8x Noctua NF-F12 industrialPPC-3000
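To help weigh series versus parallel for the CPU and motherboard blocks, here is a minimal Python sketch of a common back-of-envelope hydraulic model. It assumes each component's pressure drop scales as k·Q² (Q in L/min, pressure in kPa), approximates the pump curve as a straight line from a maximum head p_max to a maximum flow q_max, and uses hypothetical placeholder coefficients (k_cpu, k_mb, k_rest, p_max, q_max), not measured values for the EKWB block, the motherboard block, the XT45 radiator, or either D5 pump.

```python
from math import sqrt


def operating_flow(k_loop: float, p_max: float, q_max: float) -> float:
    """Flow where the loop curve dP = k_loop * Q^2 meets a linear pump curve
    dP = p_max * (1 - Q / q_max), i.e. the positive root of
    k_loop*Q^2 + (p_max/q_max)*Q - p_max = 0."""
    b = p_max / q_max
    return (-b + sqrt(b * b + 4 * k_loop * p_max)) / (2 * k_loop)


def series_layout(k_cpu, k_mb, k_rest, p_max, q_max):
    # In series the same flow passes through both blocks, so restrictions add.
    q = operating_flow(k_rest + k_cpu + k_mb, p_max, q_max)
    return {"total": q, "cpu": q, "mb": q}


def parallel_layout(k_cpu, k_mb, k_rest, p_max, q_max):
    # In parallel both branches see the same pressure drop, so Q_i = sqrt(dP / k_i).
    # The branch pair then acts like one restriction k_par = 1 / (sum of 1/sqrt(k_i))^2.
    g_cpu, g_mb = 1 / sqrt(k_cpu), 1 / sqrt(k_mb)
    k_par = 1 / (g_cpu + g_mb) ** 2
    q = operating_flow(k_rest + k_par, p_max, q_max)
    # Each branch takes a share of the total proportional to 1/sqrt(k_i).
    return {"total": q, "cpu": q * g_cpu / (g_cpu + g_mb), "mb": q * g_mb / (g_cpu + g_mb)}


# Hypothetical placeholder coefficients, NOT measured values for these parts.
params = dict(k_cpu=0.15, k_mb=0.25, k_rest=0.20, p_max=38.0, q_max=25.0)
for name, layout in (("series", series_layout), ("parallel", parallel_layout)):
    flows = layout(**params)
    print(name, {key: round(val, 2) for key, val in flows.items()})
```

In this model parallel always gives more total loop flow, since the two branches together are less restrictive than the same blocks in series, but the flow splits and the more restrictive branch gets the smaller share. Series sends the full loop flow through both blocks. Plugging in manufacturer pressure-drop curves for the real parts would make the comparison meaningful.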

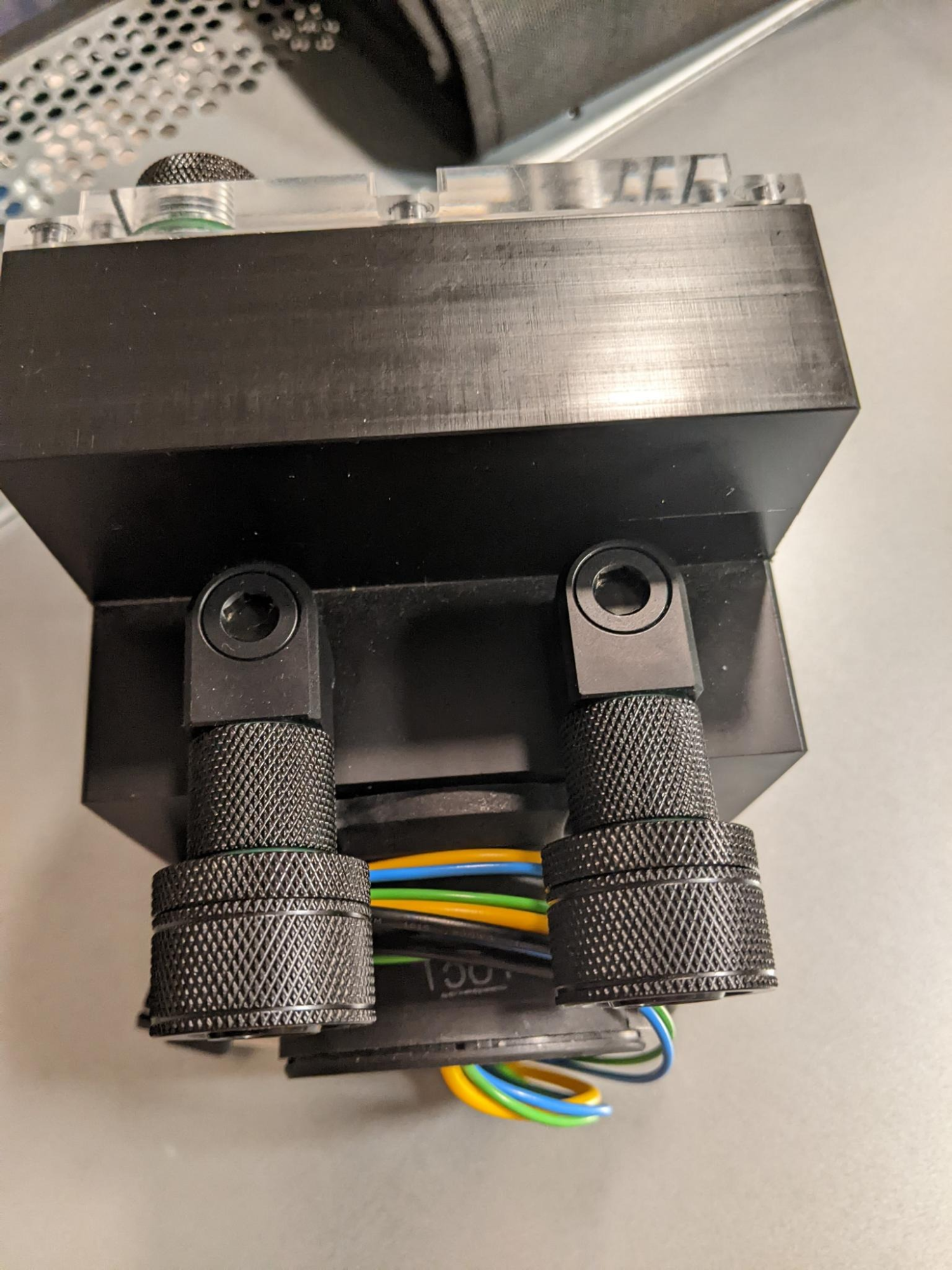

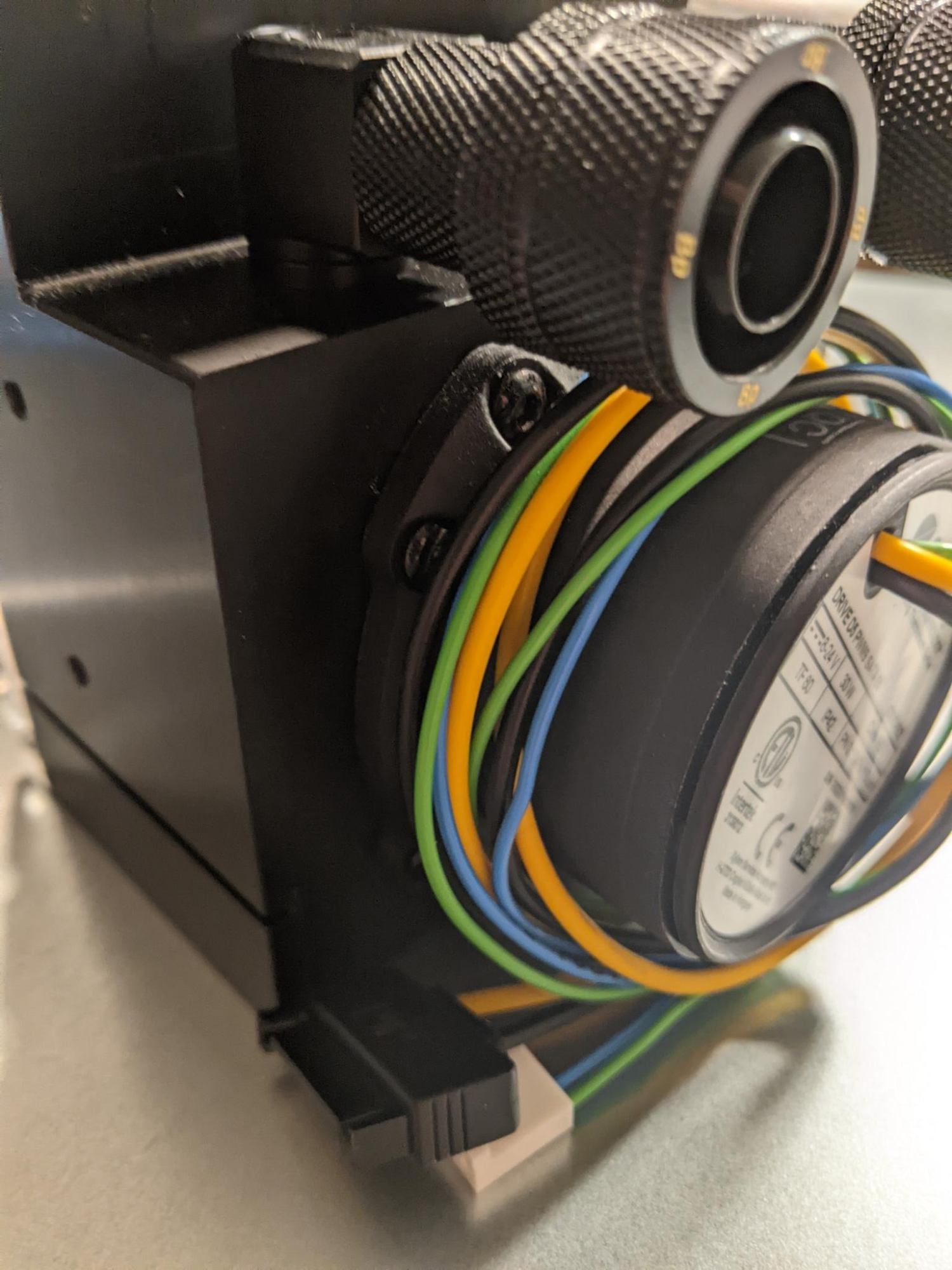

I got the case in after a long wait for restock at Performance PCs.

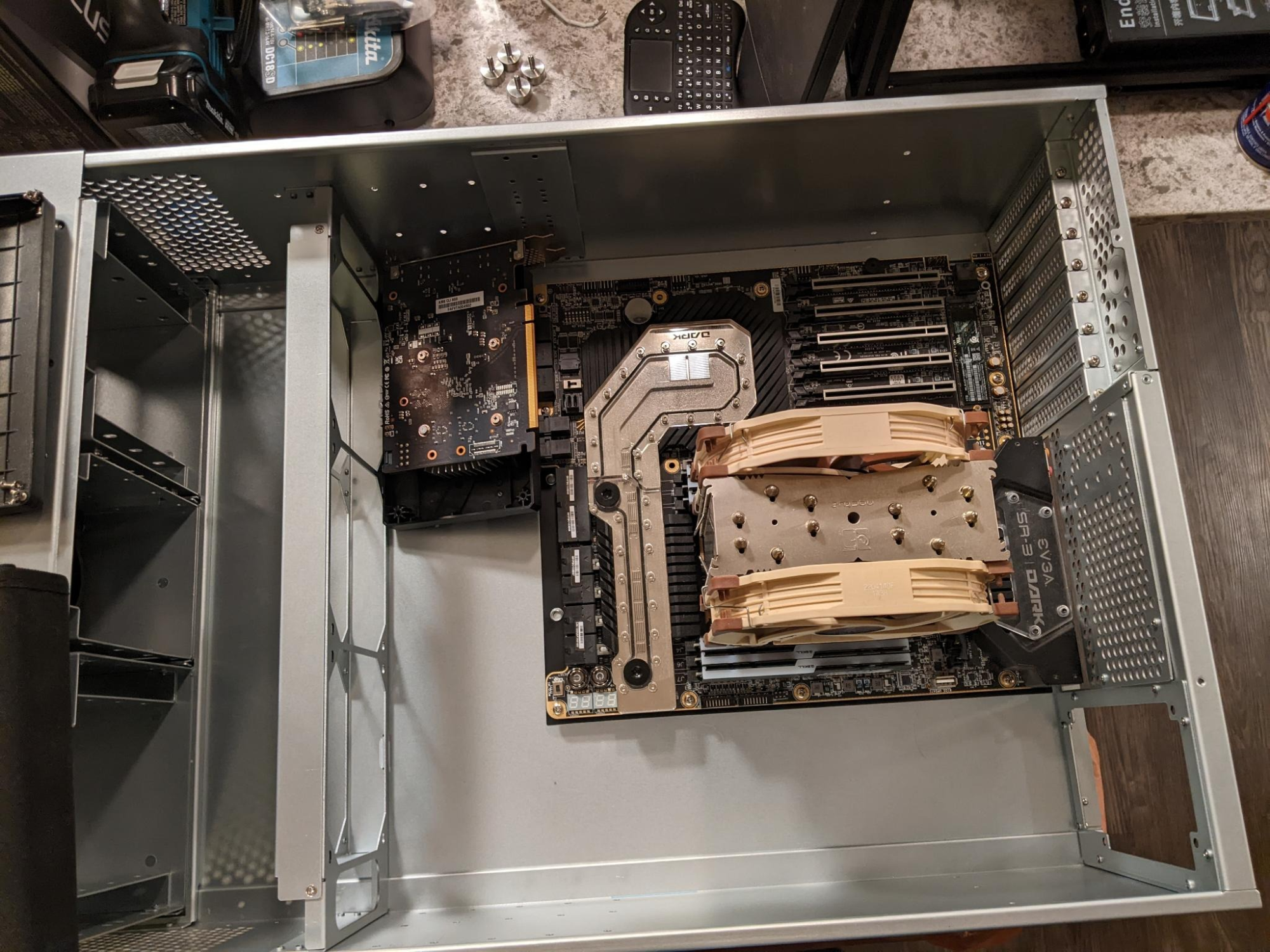

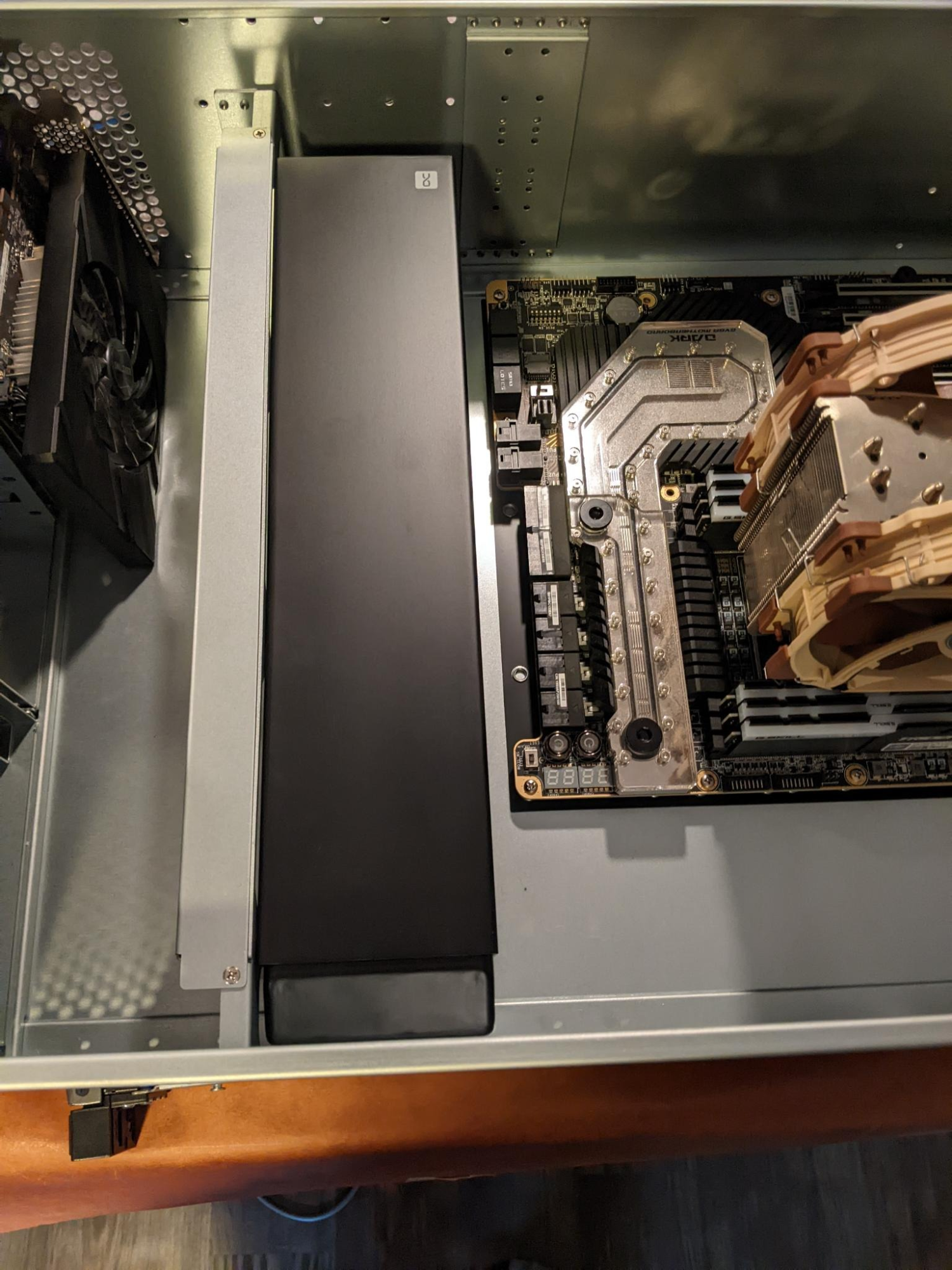

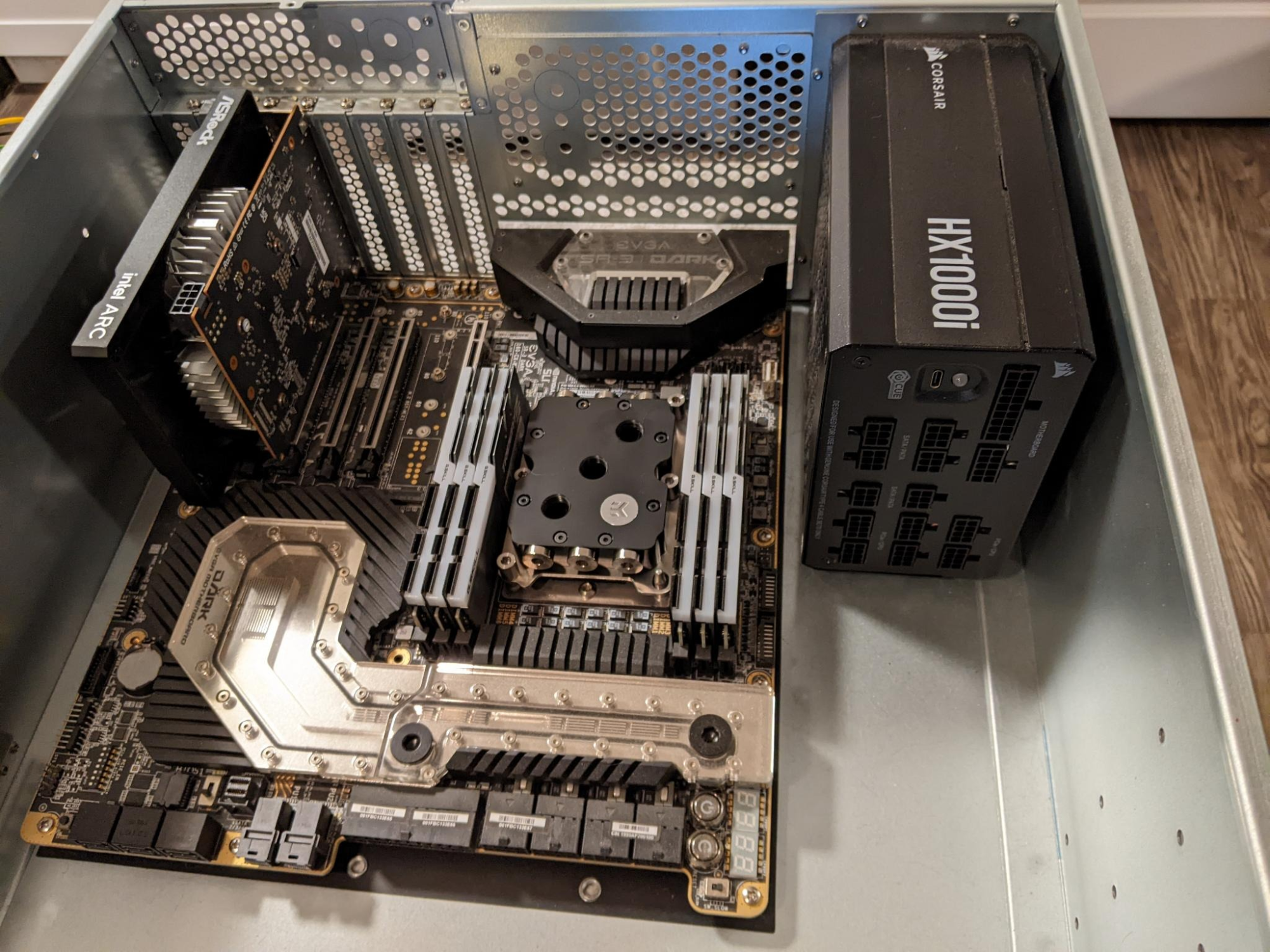

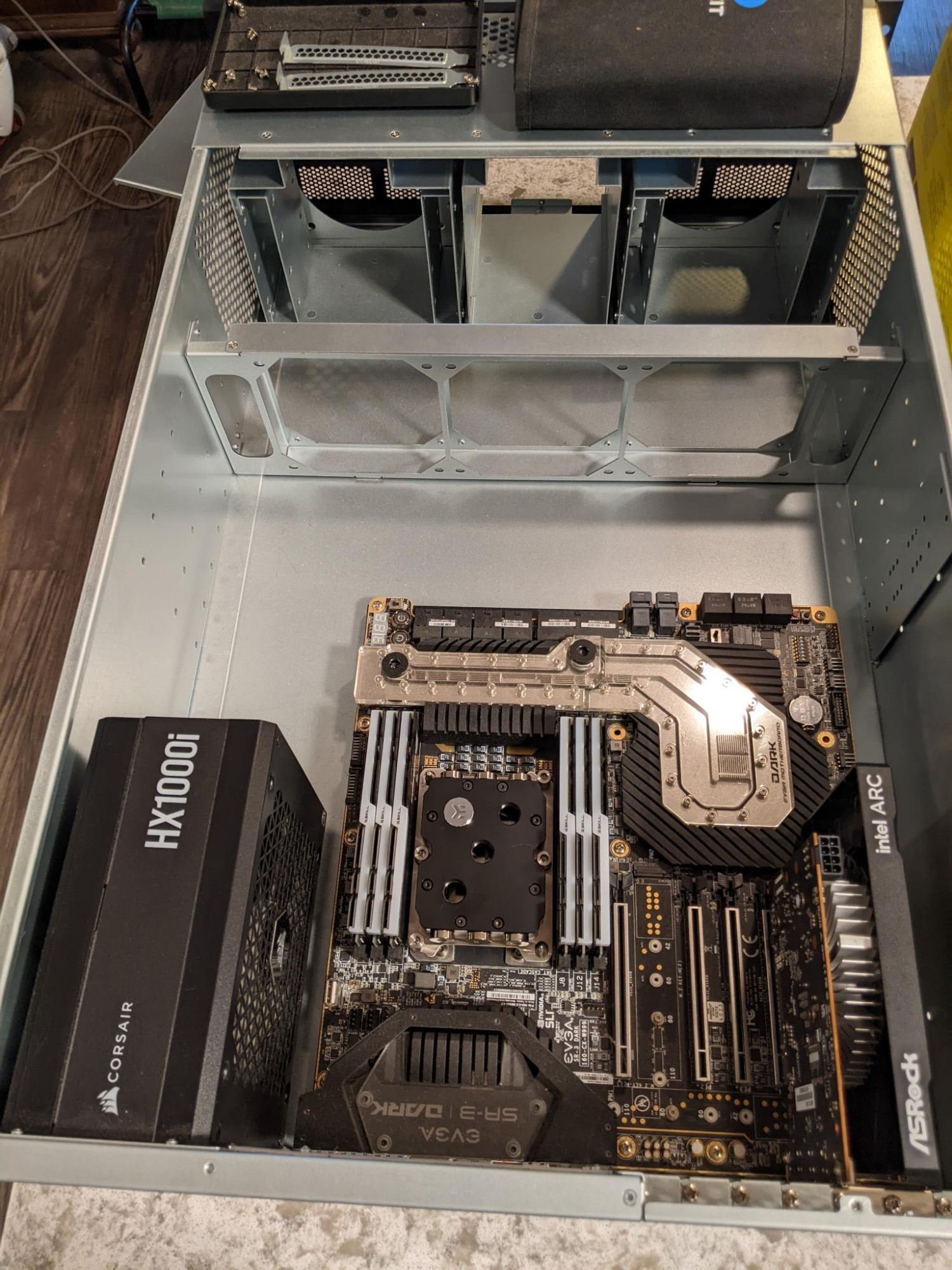

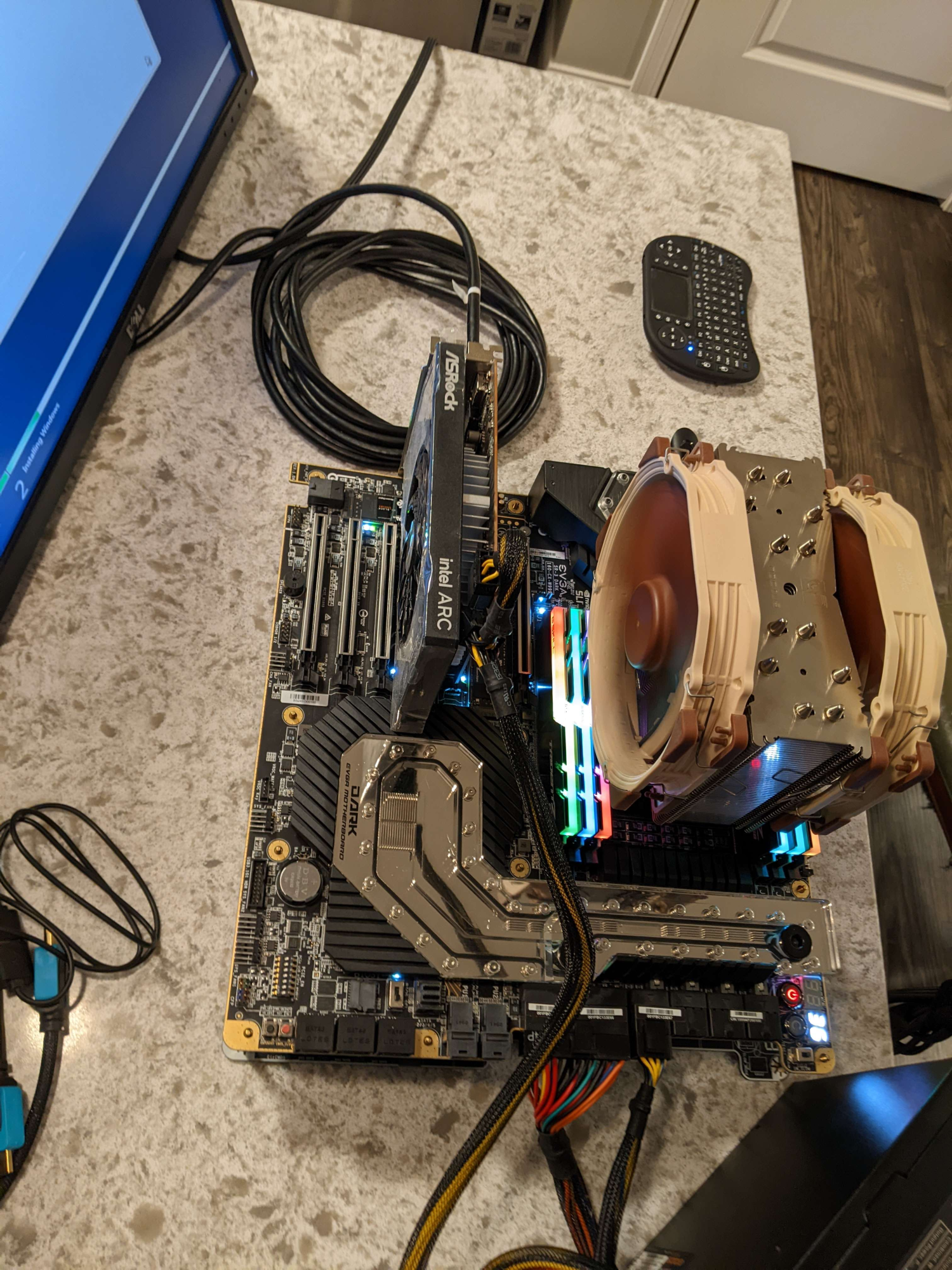

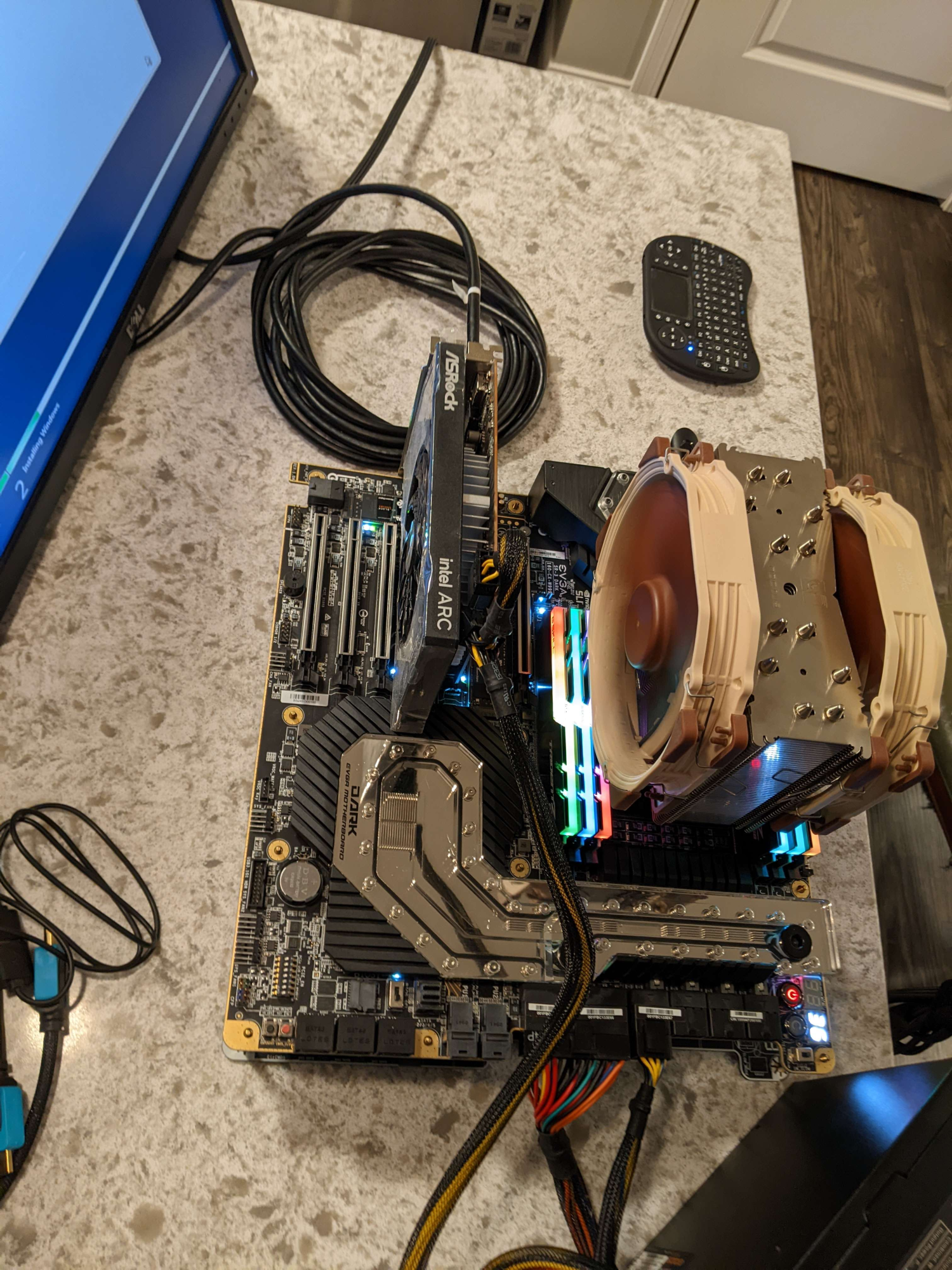

Here is a pic from when I was pretesting the hardware.
