erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,942

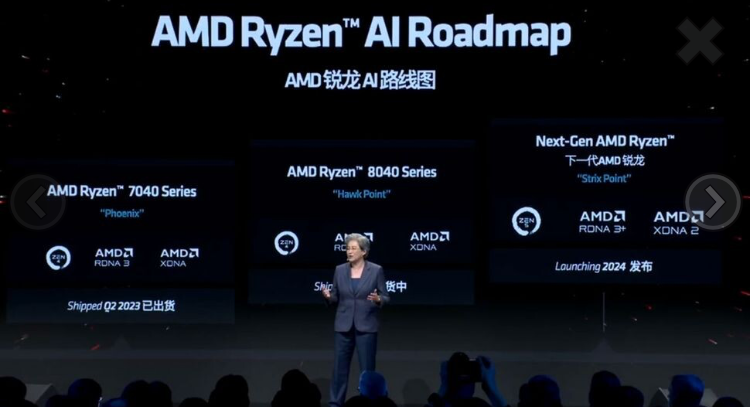

Is Strix Point already known to the collective here?

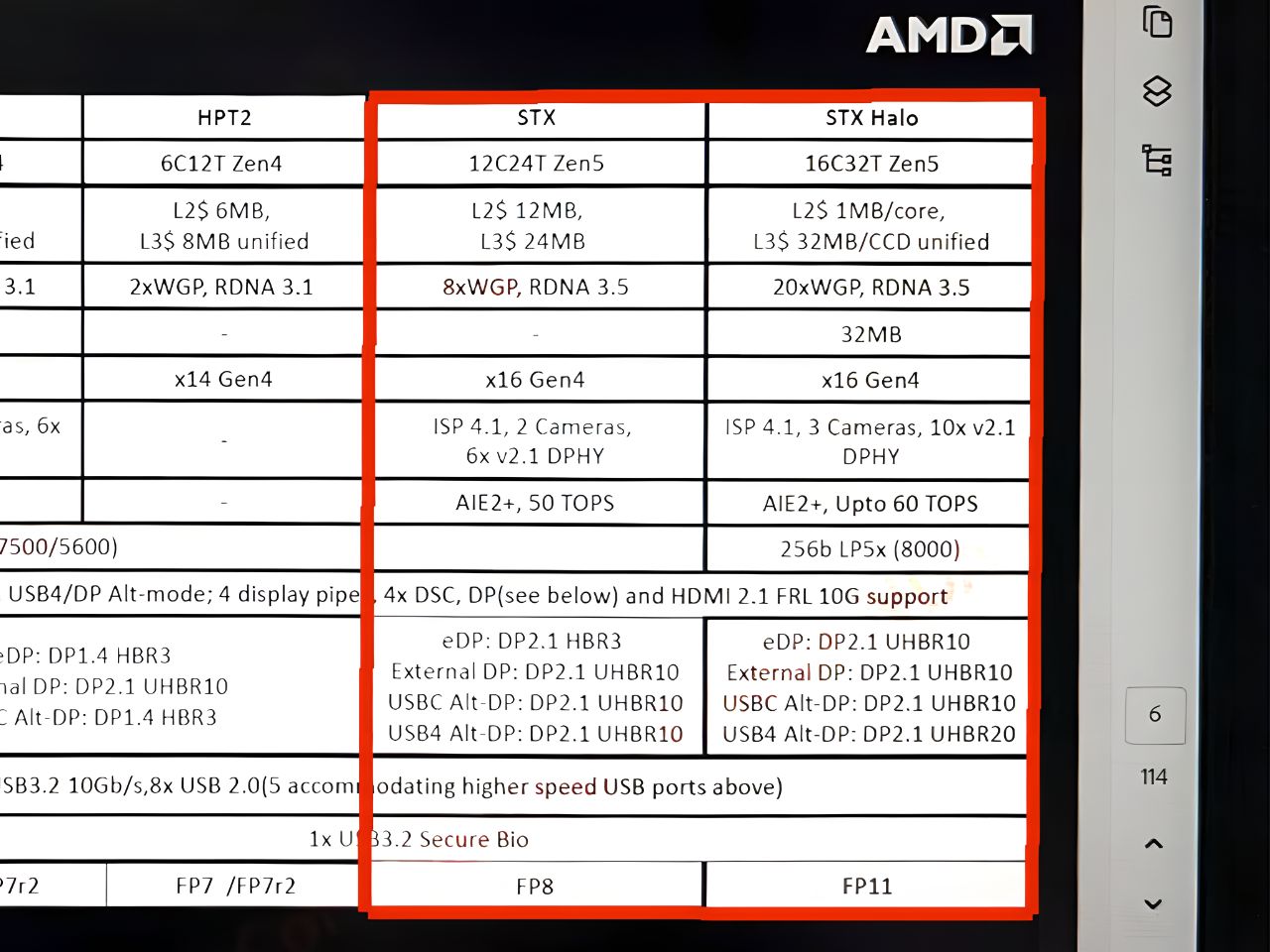

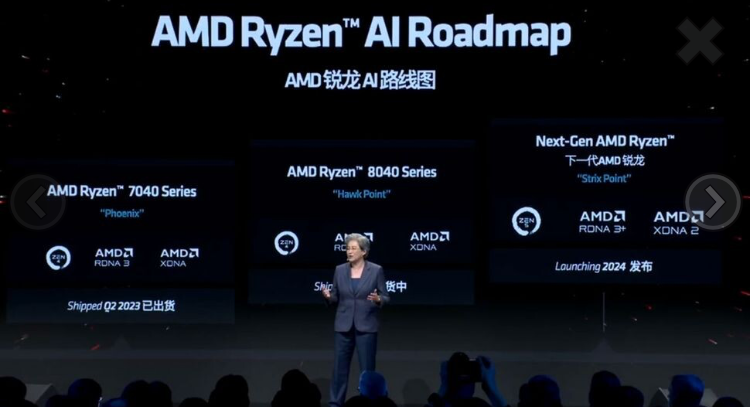

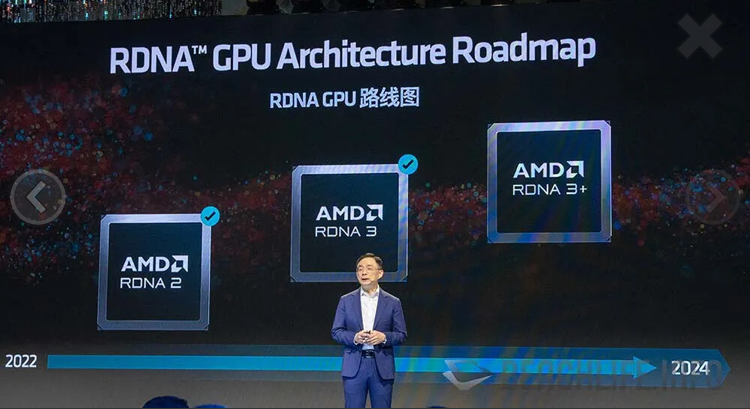

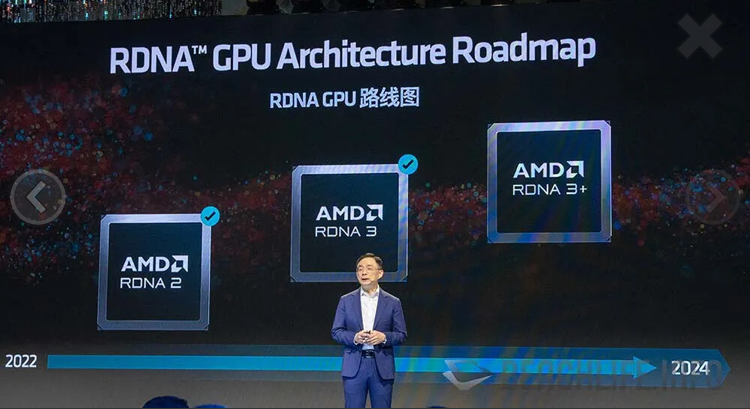

“AMD's SVP of GPU Technology and Engineering R&D, David Wang, was next on stage to dive a little deeper into the newly official RDNA 3+ and XDNA 2 architectures. It really was just a little deeper, as he mainly talked about the current-gen Hawk Point. For example, perhaps the biggest thing we learned about RDNA 3+ was its name... Previously we have reported on sightings of RDNA 3.5 drivers, but now it looks like the GPU series also sometimes identified as GFX115X will be dubbed RDNA 3+. Without a major version number update, we expect only slight tweaks to the now-familiar RDNA 3 in the upcoming Hawk Point APUs.”

Source: https://www.tomshardware.com/pc-com...egrating-zen-5-rdna-3-and-xdna-2-architecture
