erek


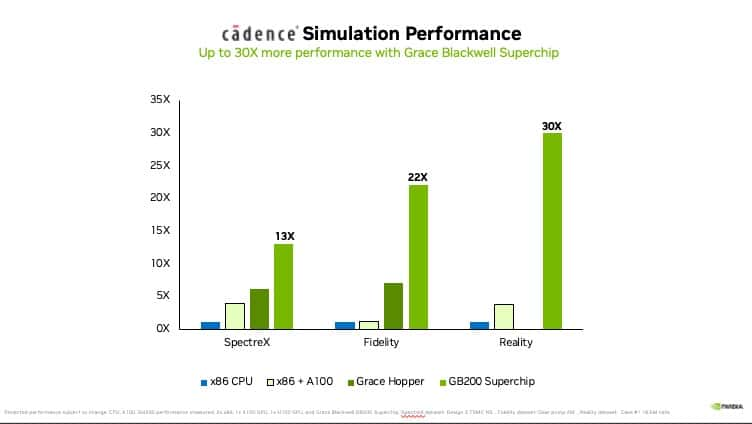

"NVIDIA has shared more performance statistics of its next-gen Blackwell GPU architecture which has taken the industry by storm. The company shared several metrics including its science, AI, & simulation results versus the outgoing Hopper chips and competing x86 CPUs when using Grace-powered Superchip modules.

NVIDIA's Monumental Performance Gains With Blackwell GPUs Aren't Just Limited To AI: Science & Simulation See Huge Boost Too

In a new blog post, NVIDIA has shared how Blackwell GPUs are going to add more performance to the research segment, which includes quantum computing, drug discovery, fusion energy, physics-based simulations, scientific computing, & more. When the architecture was originally announced at GTC 2024, the company showcased some big numbers, but we have yet to get a proper look at the architecture itself. While we wait for that, the company has more figures for us to consume."

Source: https://wccftech.com/nvidia-blackwe...0x-faster-simulation-science-18x-faster-cpus/
